It’s surprising that many companies still rely on manual data analysis despite advances in AI. OpenAI tackled this challenge head-on with an in-house data agent that processes massive datasets and delivers actionable insights in minutes. By harnessing the latest iterations of GPT-5, Codex, and advanced memory systems, this agent stands out in speed and reliability compared to traditional approaches.

This article outlines how OpenAI built this innovative tool from the ground up, the reasons behind key design decisions, and lessons learned from deploying it in real production environments.

What Foundation Concepts Underpin OpenAI’s Data Agent?

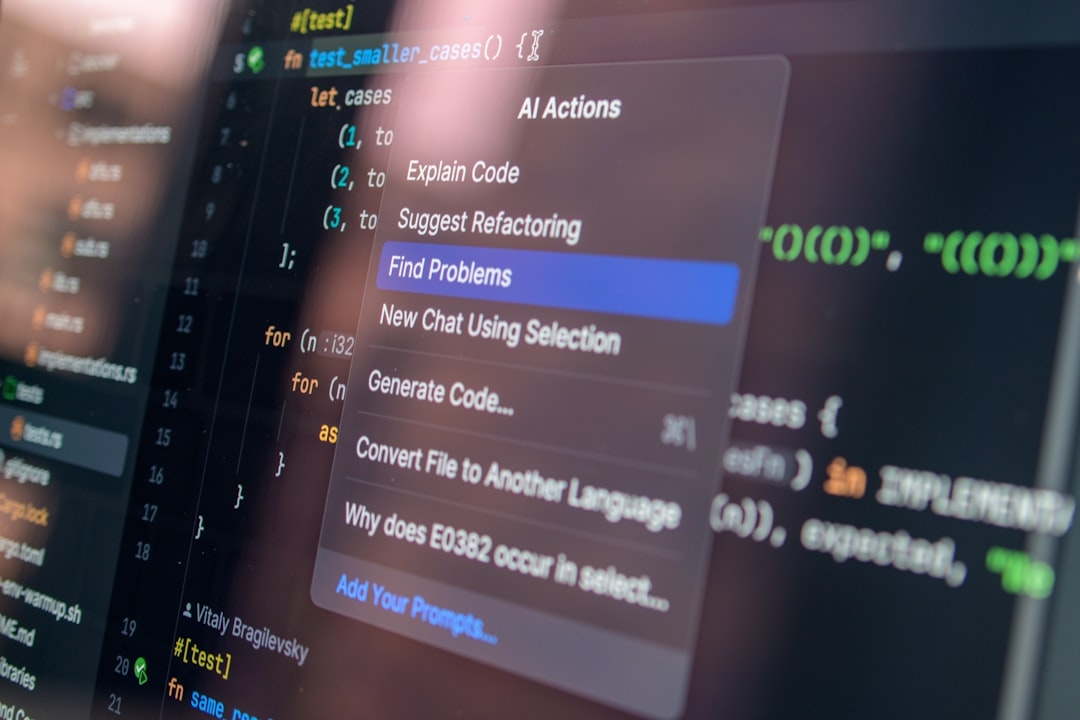

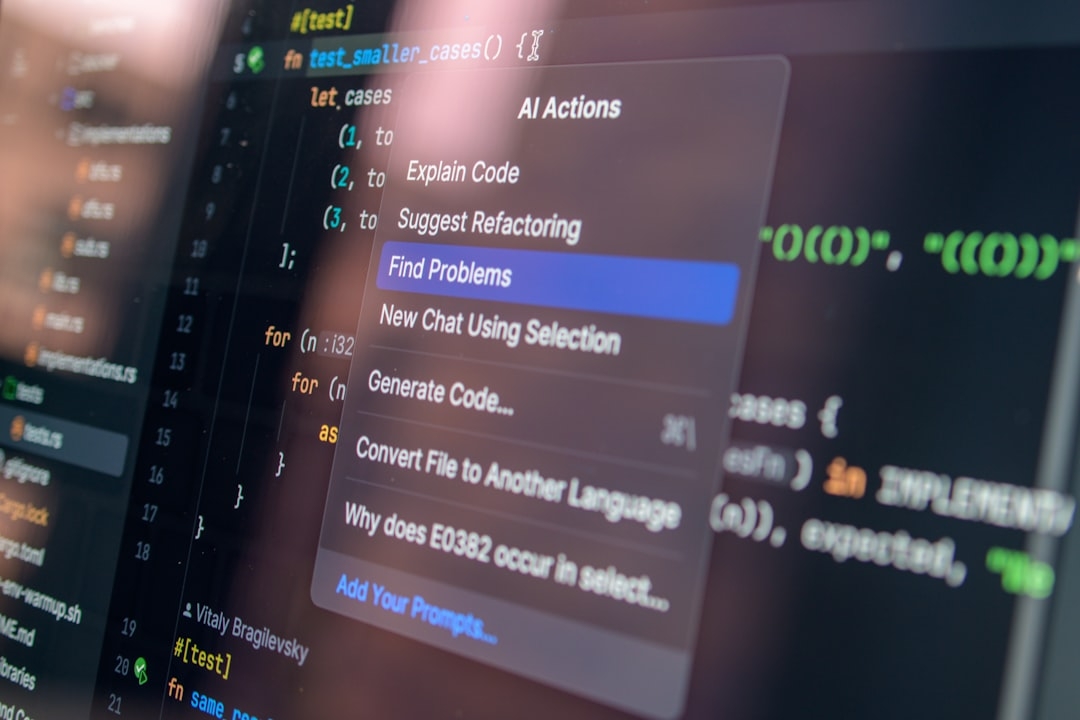

The core idea behind the data agent is to combine large language models (LLMs) with a memory system and code generation to reason over structured data. GPT-5 refers to OpenAI’s latest iteration of the general-purpose transformer model tuned for broader reasoning and understanding. Codex is a specialized AI that converts natural language queries into executable code.

Together, they enable the data agent to:

- Interpret complex natural language questions

- Generate and run code snippets to fetch and process data

- Remember context across multiple interactions to refine answers

This multi-component architecture solves one persistent problem: existing data tools often required users to know SQL or other query languages, limiting accessibility.

How Does OpenAI’s AI Data Agent Work?

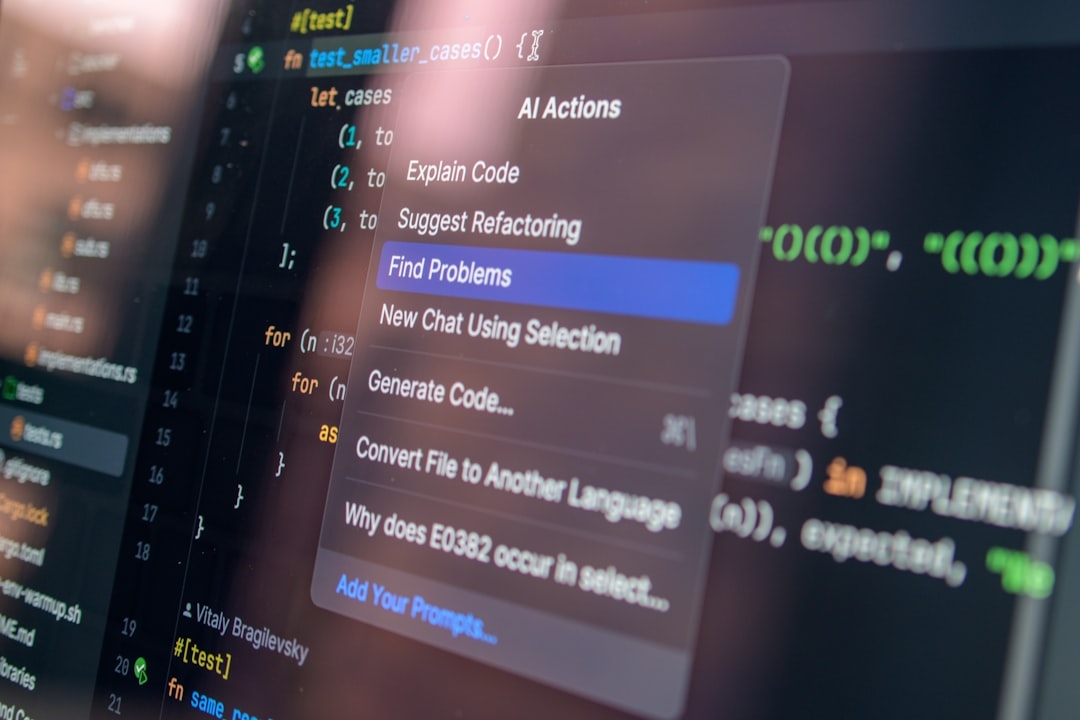

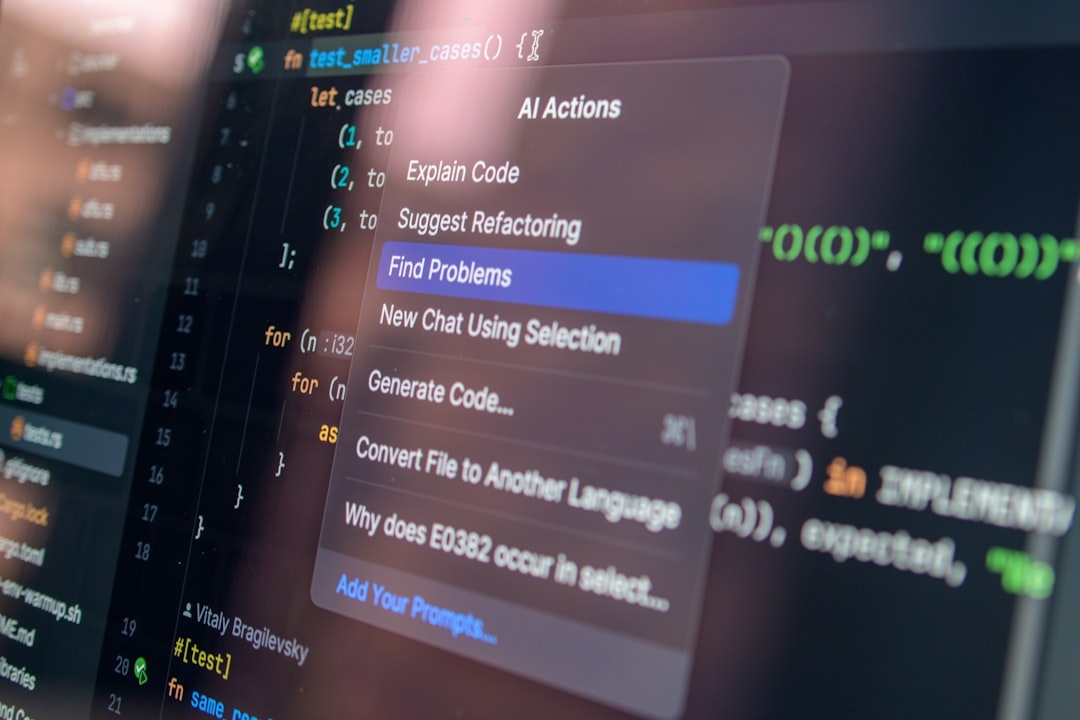

At its core, the agent listens for user queries expressed in plain English. It then triggers Codex to generate the necessary code that can extract relevant data segments, such as filtering or aggregations, from large datasets. GPT-5 handles the reasoning part, understanding the intent and verifying results.

The memory component stores previous queries and intermediate data, allowing the agent to handle follow-up questions without starting from scratch. This is vital for sessions with evolving queries.

One key insight from OpenAI’s development experience was balancing code generation with caching data outputs. If the agent runs costly computations repeatedly, it slows down. So, intermediate results are saved for reuse, accelerating subsequent queries.

Why Use Both GPT-5 and Codex?

GPT-5 excels at language understanding and complex reasoning but isn't optimized to produce fully executable code reliably. Codex, trained expressly for programming tasks, generates high-quality code needed to manipulate the data.

Splitting these roles leverages each model’s strengths. GPT-5 conceptualizes the problem, while Codex performs the execution.

When Should You Use an AI Data Agent Like This?

AI data agents shine in scenarios where teams need quick, accurate answers from vast, evolving datasets without requiring deep technical skills. This includes business intelligence, scientific research, and operational analytics.

From OpenAI’s experience, these agents outperform conventional BI tools when questions are less structured or exploratory by nature.

However, there are trade-offs. For mission-critical use cases demanding strict accuracy or audits, traditional query languages and human validation are often preferred because AI-driven code generation can sometimes produce unexpected or suboptimal queries.

What Were the Production Challenges?

During deployment, OpenAI faced several issues common to AI-integration projects:

- Latency: Generating code and running it on huge datasets can introduce delays. Caching and partial precomputation helped mitigate this.

- Reliability: The agent had to detect when outputs don’t align with user intent or when code snippets might cause errors.

- Context management: Ensuring the memory component maintained relevant context without bloating or confusion.

Extensive testing and monitoring frameworks were crucial to maintain trustworthiness and performance.

What Are Advanced Patterns in This System?

OpenAI incorporated iterative refinement, where the agent evaluates its initial answers and adjusts code generation or queries dynamically. It also supports multi-modal inputs combining tables, text, and metadata to enrich reasoning.

Moreover, the system leverages an internal feedback loop: user interactions inform model updates and fine-tuning to improve accuracy over time.

Quick Reference: Key Takeaways

- The combination of GPT-5, Codex, and memory builds a powerful AI data agent for massive data analysis.

- Splitting responsibilities between language understanding (GPT-5) and code generation (Codex) is vital.

- Memory management is critical to handle complex multi-turn queries efficiently.

- Production challenges include latency, code reliability, and context preservation.

- This approach excels in exploratory data analysis but may not replace traditional BI in regulated environments.

How Can You Evaluate If an AI Data Agent Fits Your Needs?

Start by mapping your data complexity and query variability. If your users need rapid insights without coding skills, AI agents can boost productivity.

Next, assess your tolerance for occasional inaccuracies versus speed. Implement pilot projects focusing on monitoring and iterative refinement—key lessons from OpenAI’s journey.

The trade-offs are between empowerment and control. AI agents reduce barriers but require mature operational practices for safe deployment.

Conclusion

OpenAI’s internal data agent, powered by GPT-5 and Codex, offers a new paradigm for interacting with massive datasets—speeding up analysis and broadening access beyond technical experts. Its design lessons emphasize the power of combining AI capabilities with smart memory and caching strategies.

While not a universal replacement for existing tools, this approach opens new possibilities for businesses aiming to democratize data insights. By understanding the trade-offs and real-world challenges OpenAI faced, organizations can better judge where to apply these agents effectively.

If you want to explore this further, try defining a quick evaluation framework. In 10-20 minutes, list your data needs, user skill levels, and accuracy requirements. This will guide you on whether adopting an AI-powered data agent makes sense for your environment.

Technical Terms

Glossary terms mentioned in this article

Comments

Be the first to comment

Be the first to comment

Your opinions are valuable to us