Have you wondered why Meta recently paused teen access to its AI characters across all its apps? This decision raises questions about the balance between innovation and safety in AI-powered social interactions. Meta's move highlights the challenges companies face when deploying advanced AI experiences, especially for younger audiences.

AI characters, virtual personas powered by artificial intelligence that converse with users, have become a growing trend. They offer engaging, personalized interactions, but they also introduce risks, particularly for teenagers, who may be more vulnerable to misleading or inappropriate content.

What Led Meta to Pause Teen Access to AI Characters?

Meta announced a global pause on teen access to AI characters across all its apps. The goal is not to abandon AI development but to prioritize safety while an updated, improved version is built. This cautious approach suggests Meta recognizes potential shortcomings or risks in its current AI implementations when it comes to underage users.

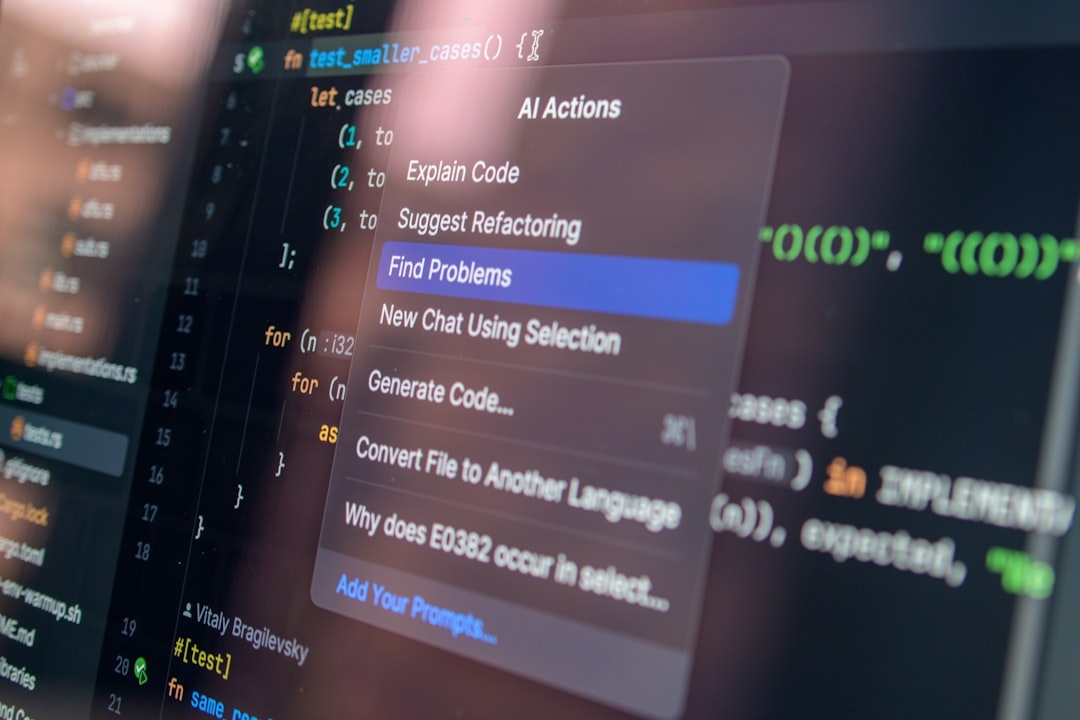

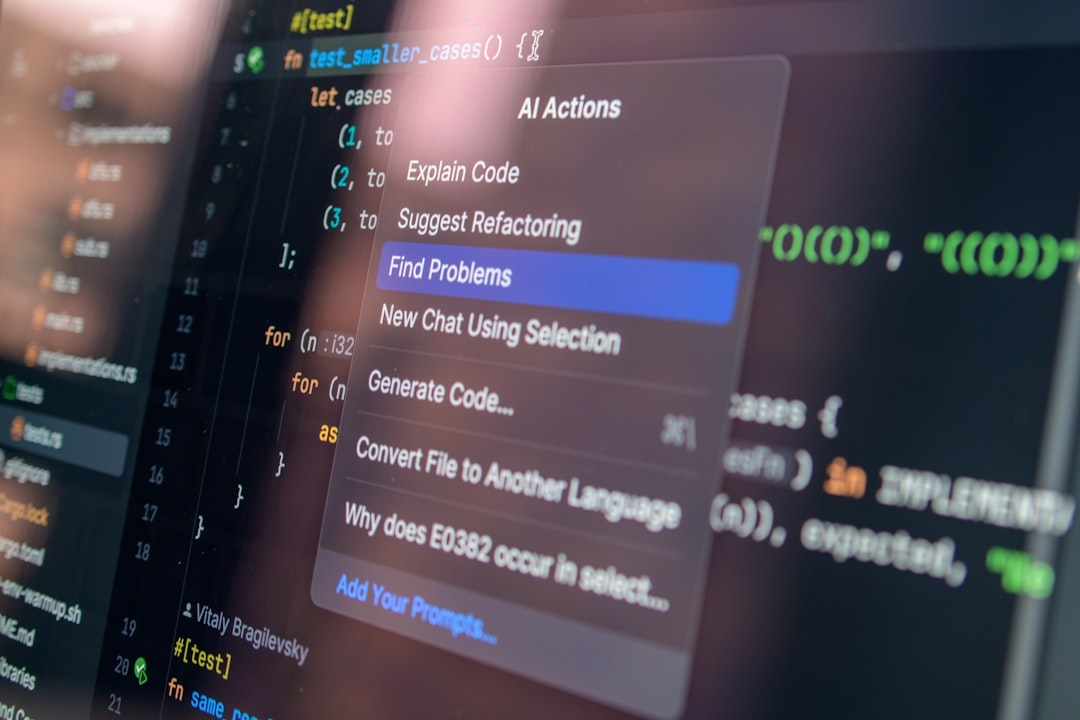

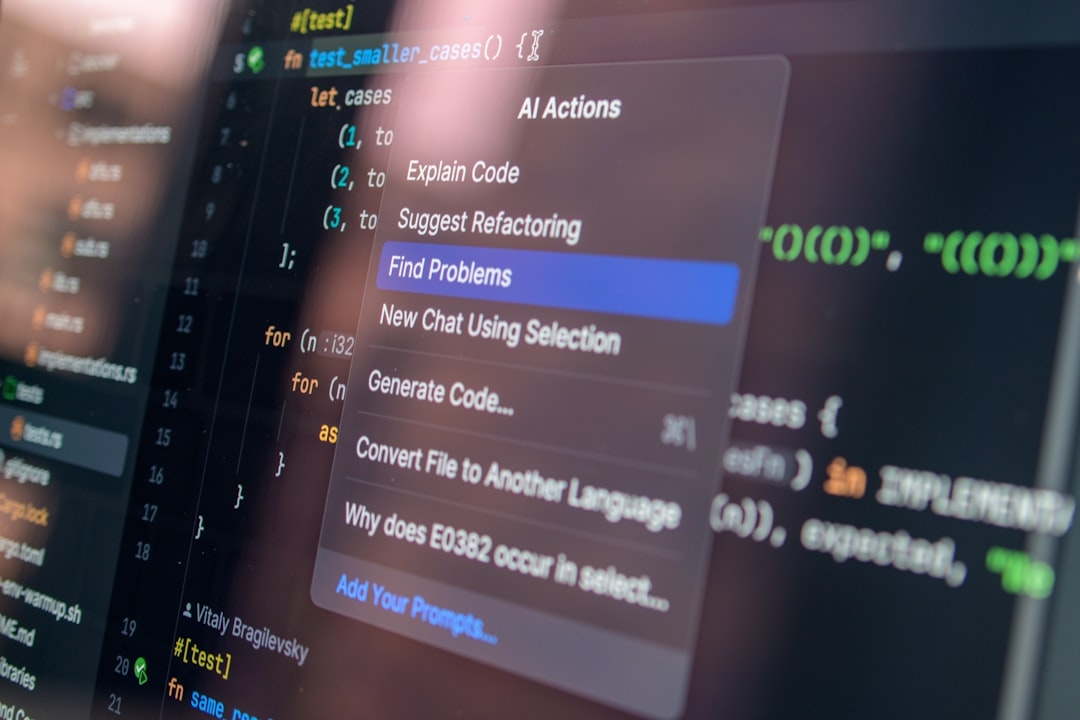

AI characters are conversational systems that use natural language processing and machine learning to simulate human-like interaction. They range from simple chatbots to elaborate personas capable of nuanced dialogue.

How Does Meta’s Pause Affect Teens and Users Globally?

For teens, the pause means they lose access to AI characters on the platforms where they could previously chat with them. It reduces the chance of exposure to unmoderated or unforeseen responses, a particular concern with AI, which can sometimes generate unexpected or inappropriate replies.

This action protects younger users but may cause frustration for teens who valued these programmed companions for entertainment or social interaction. For parents and guardians, the move offers reassurance that Meta is taking steps to mitigate risks associated with AI conversational agents.

How Does Meta Plan to Improve the AI Experience for Teens?

Meta emphasizes it is not stepping back from AI innovation. Instead, it is investing in building a safer, updated version of AI characters tailored to younger users. This involves refining AI safety protocols, improving moderation techniques, and ensuring the AI adheres to stricter content guidelines.

Natural Language Processing (NLP), a core technology behind these characters, continues to improve at interpreting context, tone, and risk signals in conversations, which is key to preventing harmful interactions.

What Are the Practical Considerations Behind This Decision?

Several real-world constraints make pausing access a practical step:

- Risk management: AI models are not perfect and can unintentionally produce inappropriate or misleading content, which is especially critical to control when users are minors.

- Regulatory environment: Privacy laws and child protection regulations put additional pressure on companies to ensure AI tools are safe for teen use.

- Technical complexity: Creating AI that is simultaneously engaging, safe, and compliant with global standards requires significant investment in research and development.

- Trust and brand reputation: Missteps in AI behavior can harm a company’s image and erode user trust, particularly if younger users are affected.

Is Pausing Teen Access a Common Industry Practice?

Companies building AI-powered apps often take precautionary steps to protect vulnerable users, and pausing or restricting access while improvements are made is not unusual. Because the technology is still evolving, companies must continually balance innovation against responsible deployment.

What Should Users and Parents Keep in Mind?

Understanding these nuances can help users and guardians make informed decisions:

- Be aware that AI characters, while entertaining, are still automated systems prone to errors or biases.

- Encourage critical thinking about AI responses, especially among teens.

- Stay updated on platform changes and moderation policies to protect user safety.

- Consider opting for AI tools with robust safety features or parental controls.

Practical Considerations: What Are the Trade-offs?

Meta’s temporary pause reveals trade-offs companies face when deploying AI characters at scale:

- Time: Developing safer AI takes time, delaying feature availability.

- Cost: Investing in improved algorithms and content moderation means higher expenses.

- Risk: Early releases might expose users to harmful content or privacy risks.

- Constraints: Navigating global laws and diverse cultural norms complicates deployment.

In real-world deployments, these factors demand a pragmatic, iterative approach instead of rushed rollouts.

How Can You Evaluate AI Safety for Teen Use Quickly?

If you're evaluating AI character access for teens or developing similar tools, here is a quick framework you can work through in 10 to 20 minutes:

- Identify the intended user group and their vulnerability level.

- Review available safety and content moderation measures in place.

- Assess transparency: Does the AI disclose it’s an automated system?

- Check for parental control or user feedback options.

- Evaluate compliance with relevant regulations (e.g., COPPA, GDPR).

- Examine past incident reports or user complaints if publicly available.

Applying these criteria helps quickly gauge the suitability of AI characters for teens in any given context.
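As a minimal sketch, the checklist above can be tracked in a few lines of Python. The criterion keys and the `evaluate` function are hypothetical names chosen for illustration; the code only records yes/no answers and lists the items still needing attention, so it does not judge safety on its own.

```python
# Hypothetical labels for the six criteria in the quick evaluation framework.
CRITERIA: dict[str, str] = {
    "audience_identified": "Intended user group and vulnerability level identified",
    "moderation_in_place": "Safety and content moderation measures reviewed",
    "discloses_ai": "AI discloses that it is an automated system",
    "parental_controls": "Parental controls or user feedback options available",
    "regulatory_compliance": "Compliance with relevant regulations (e.g., COPPA, GDPR) checked",
    "incident_history_reviewed": "Past incident reports or user complaints examined",
}


def evaluate(answers: dict[str, bool]) -> list[str]:
    """Return descriptions of criteria that are unmet or were not answered."""
    # A missing answer counts as unmet, so gaps are flagged rather than assumed fine.
    return [desc for key, desc in CRITERIA.items() if not answers.get(key, False)]


if __name__ == "__main__":
    answers = {
        "audience_identified": True,
        "moderation_in_place": True,
        "discloses_ai": False,
        "parental_controls": True,
    }
    for gap in evaluate(answers):
        print("Needs attention:", gap)
```

Treating an unanswered criterion as unmet is a deliberately conservative choice: when information is missing, the sketch flags it instead of assuming the tool passes.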

Final Thoughts

Meta’s decision to pause teen access to AI characters is a cautious, pragmatic reaction to the real challenges posed by AI in social applications. It signals an acknowledgment that AI safety—especially for younger audiences—cannot be compromised in the rush to innovate.

While this move may disappoint some users, it underscores the value of balancing technical possibilities with ethical responsibilities. By prioritizing safety, Meta aims to return with a superior AI experience that users can trust.
