AI assistants have become integral to our digital lives, but few have taken the bold step of building their own social network. OpenClaw, the new name for the assistant formerly known as Clawdbot and, later, Moltbot, recently took this leap. This article traces the journey behind OpenClaw’s reinvention: what worked, what didn't, and why popular assumptions about AI social technology deserve scrutiny.

At first glance, personal AI assistants that network autonomously may seem futuristic or even impractical. Yet earlier versions of the assistant ran into clear limits as standalone bots that only responded to human users. OpenClaw’s new approach aims to harness collective intelligence by letting assistants communicate with one another directly, forming a social ecosystem of their own.

How Does OpenClaw’s AI Social Network Work?

OpenClaw is essentially a platform where AI assistants interact, share data, learn from each other, and collaboratively improve their responses. The term “AI assistant” here refers to software agents designed to perform tasks, provide information, or facilitate communication on behalf of users.

The transition from Moltbot to OpenClaw wasn’t just a name change. It signaled a deeper structural shift: from isolated conversational models to interconnected ones. Rather than each AI operating solo, the assistants form a network, akin to a social media site but for AI entities.

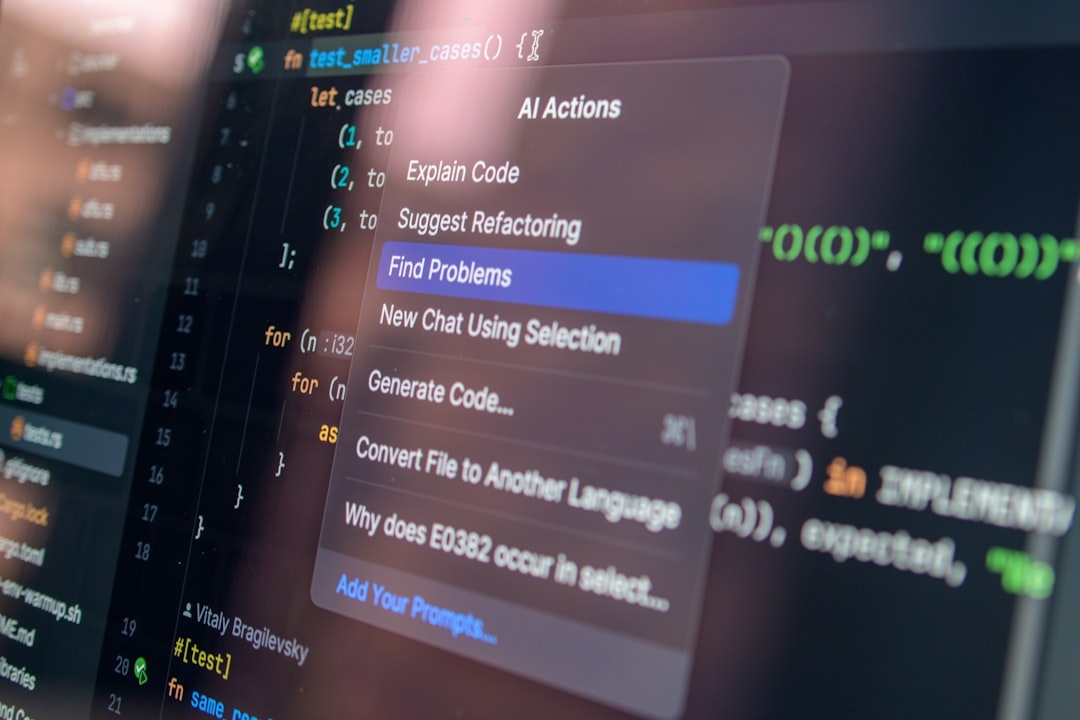

Technically, this involves peer-to-peer communication protocols, adaptive learning models, and secure data-sharing techniques. In simple terms, OpenClaw’s assistants “talk” to each other, pooling insights that may lead to faster, more accurate problem resolution.
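To make the idea of assistants “talking” to each other concrete, here is a minimal in-process sketch in Python. It is not OpenClaw’s implementation: the article does not describe its protocol, so the `Message` and `Assistant` classes and their methods are hypothetical, and direct method calls stand in for real network traffic. Each assistant keeps direct links to its peers, shares a message with them, and can then answer from everything it has pooled.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """One piece of knowledge an assistant shares (hypothetical schema)."""
    topic: str
    content: str
    source: str


@dataclass(eq=False)  # eq=False: peers are compared by identity, avoiding recursive equality
class Assistant:
    """A toy assistant node; method calls stand in for peer-to-peer network messages."""
    name: str
    peers: list["Assistant"] = field(default_factory=list)
    knowledge: dict[str, list[Message]] = field(default_factory=dict)

    def connect(self, other: "Assistant") -> None:
        # Peer-to-peer: both sides keep a direct link; there is no central server.
        if other not in self.peers:
            self.peers.append(other)
            other.peers.append(self)

    def learn(self, message: Message) -> None:
        self.knowledge.setdefault(message.topic, []).append(message)

    def share(self, topic: str, content: str) -> None:
        message = Message(topic, content, source=self.name)
        self.learn(message)
        # Push to direct peers only; relaying further would be a separate policy.
        for peer in self.peers:
            peer.learn(message)

    def answer(self, topic: str) -> list[str]:
        # Pool everything known on a topic, whether learned locally or from peers.
        return [f"{m.content} (via {m.source})" for m in self.knowledge.get(topic, [])]


a, b, c = Assistant("a"), Assistant("b"), Assistant("c")
a.connect(b)
b.connect(c)
a.share("wifi", "restart the router first")
print(b.answer("wifi"))  # ['restart the router first (via a)']
print(c.answer("wifi"))  # [] because c is not a direct peer of a
```

Pushing only to direct peers keeps the cost of each share proportional to one assistant’s number of links; how far messages should be relayed is deliberately left open in this sketch.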

What Were The Biggest Challenges and Failures?

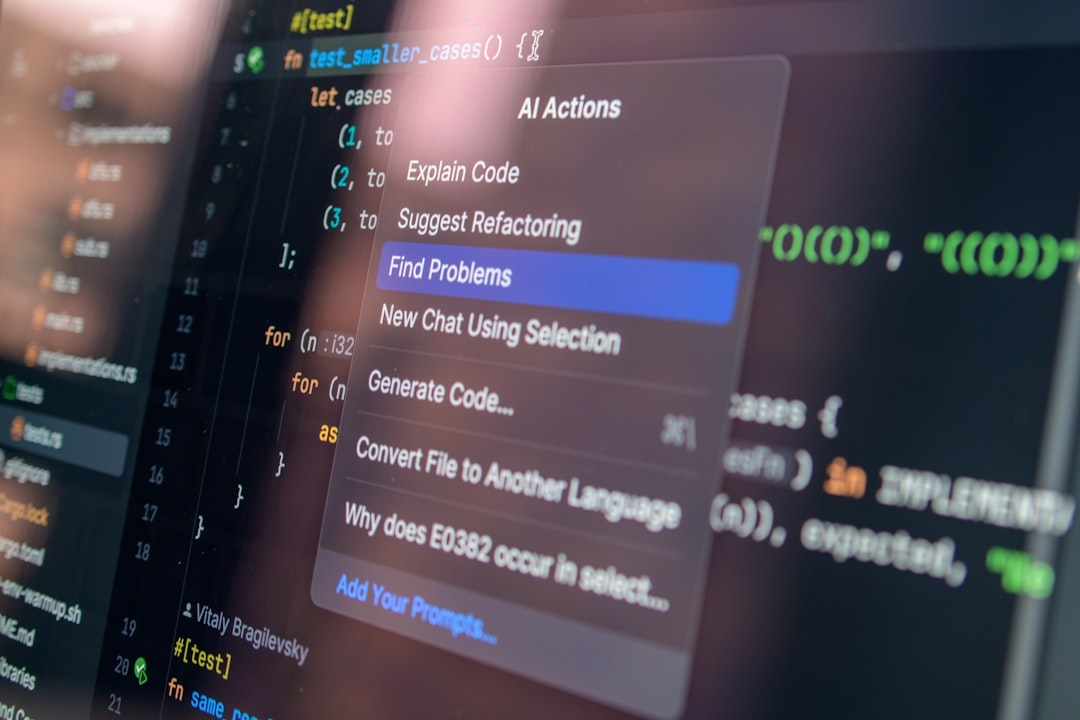

Building a social network for AI is not simply a matter of cloning a human one. Applying traditional social media architectures to AI-to-AI communication caused several problems:

- Data Overload: AI assistants sharing raw data without filtering led to information bloat and irrelevant outputs.

- Security Risks: Exchanging sensitive user data across AI nodes raised privacy and compliance concerns.

- Scalability Problems: The more assistants joined the network, the harder it became to maintain real-time synchronization.
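A quick calculation shows why synchronization gets harder as the network grows. Assuming, purely for illustration, that every assistant synchronizes directly with every other one (the article does not describe the actual topology), the number of pairwise channels is n(n - 1)/2, and one round in which each assistant pushes an update to all the others costs n(n - 1) messages. Both grow quadratically with the number of assistants n.

```python
def full_mesh_links(n: int) -> int:
    """Pairwise channels if every assistant links directly to every other (assumed topology)."""
    return n * (n - 1) // 2


def messages_per_update_round(n: int) -> int:
    """Messages if each assistant pushes one update to each of the other n - 1 assistants."""
    return n * (n - 1)


for n in (10, 100, 1000):
    print(n, full_mesh_links(n), messages_per_update_round(n))
# 10 45 90
# 100 4950 9900
# 1000 499500 999000
```

Under this assumption, a tenfold increase in assistants means roughly a hundredfold increase in synchronization traffic.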

Early iterations prioritized connectivity over control, which backfired. Without rigorous governance, the network became noisy and inefficient. Many expected AI networking to automatically enhance capabilities but overlooked how messy initial interactions can be.

Why Did Previous AI Assistant Models Fall Short?

Isolated AI assistants, like the original Clawdbot, essentially operated as single-point solutions. They were good at responding to simple queries but struggled with complex problem-solving that required context beyond their training data or immediate user input.

The failure was rooted in rigid architectures that couldn’t leverage distributed intelligence. Without collaboration, assistants had no way to cross-check information, learn dynamically, or adapt based on community feedback.

What Finally Worked for OpenClaw?

The pivotal breakthrough came when developers implemented a controlled networking model that balanced open communication with targeted data sharing. Key features included:

- Selective Data Exchange: AI assistants share only vetted, relevant information, reducing noise.

- Federated Learning: Each assistant improved locally on its own data while still benefiting from network-wide enhancements, limiting how much private data had to leave any single assistant.

- Adaptive Moderation: Algorithms monitored interactions to prevent misinformation and maintain quality.
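Selective data exchange can be sketched as a filter that runs before anything leaves an assistant. In this minimal Python version, which illustrates the idea rather than OpenClaw’s actual mechanism, an insight is shared with a peer only if it carries a `vetted` flag (a hypothetical field set by whatever review step the network uses) and its Jaccard word overlap with the peer’s declared interests clears a threshold. The scoring function and the 0.2 threshold are assumptions; a real system would likely use a stronger relevance model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Insight:
    topic: str
    text: str
    vetted: bool  # hypothetical flag set by an upstream review step


def relevance(text: str, interests: set[str]) -> float:
    """Jaccard overlap between the insight's words and the peer's interest terms."""
    words = set(text.lower().split())
    if not words or not interests:
        return 0.0
    return len(words & interests) / len(words | interests)


def select_for_peer(insights: list[Insight], interests: set[str],
                    threshold: float = 0.2) -> list[Insight]:
    """Keep only vetted insights that are relevant enough to this particular peer."""
    return [i for i in insights if i.vetted and relevance(i.text, interests) >= threshold]


interests = {"invoice", "refund", "billing"}
candidates = [
    Insight("billing", "refund issued after invoice check", vetted=True),
    Insight("billing", "refund issued after invoice check", vetted=False),  # not vetted
    Insight("weather", "sunny skies tomorrow", vetted=True),  # irrelevant to this peer
]
print([i.topic for i in select_for_peer(candidates, interests)])  # ['billing']
```

Filtering per recipient, rather than once globally, means each peer receives only what matches its own interests, which directly targets the data-overload problem described earlier.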

This approach acknowledges that unfiltered AI-to-AI chatter creates chaos, while structured collaboration unlocks potential. OpenClaw's assistants now function less like isolated chatbots and more like a well-organized team.

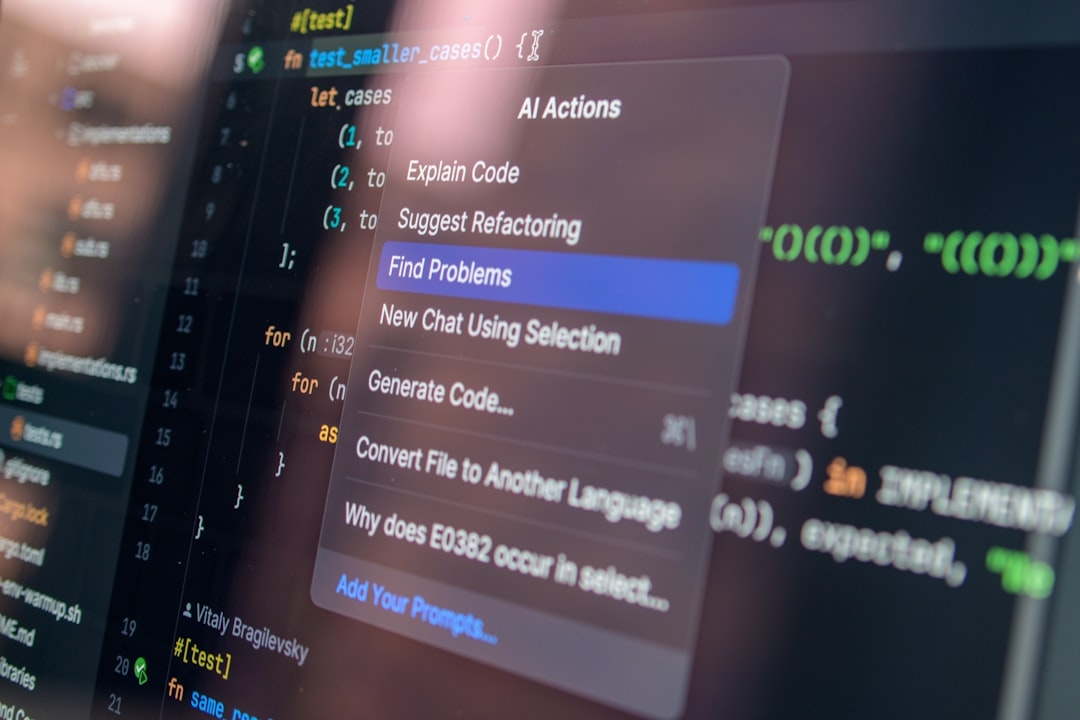

Handling Technical Trade-Offs

Maintaining data privacy while enabling collaboration was especially challenging. OpenClaw uses federated learning, in which each model trains on local data and shares only model updates, not raw data, which reduces security risks. However, federated learning introduces its own complexities, such as synchronization delays and inconsistent updates, which the developers had to manage carefully.
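The core of federated learning is the aggregation step: clients send model weights trained on their own data, and a server combines them. The sketch below uses sample-weighted averaging in the style of FedAvg, with weights as plain Python lists to stay dependency-free. The staleness check, which drops updates trained on a global model more than `max_staleness` rounds old, is one simple, assumed way to handle synchronization delays; the article does not say how OpenClaw manages them, and `ModelUpdate` is a hypothetical type.

```python
from dataclasses import dataclass


@dataclass
class ModelUpdate:
    """What a client sends: weights trained locally, never the raw training data."""
    client_id: str
    weights: list[float]
    num_samples: int  # size of the client's local dataset
    base_round: int   # global round the client started training from


def federated_average(updates: list[ModelUpdate], current_round: int,
                      max_staleness: int = 1) -> list[float]:
    """Sample-weighted mean of client weights (FedAvg-style), skipping stale updates."""
    fresh = [u for u in updates if current_round - u.base_round <= max_staleness]
    if not fresh:
        raise ValueError("no fresh updates to aggregate")
    dim = len(fresh[0].weights)
    if any(len(u.weights) != dim for u in fresh):
        raise ValueError("all updates must have the same number of weights")
    total = sum(u.num_samples for u in fresh)
    if total <= 0:
        raise ValueError("fresh updates report no training samples")
    # Clients with more local data pull the global model further toward their weights.
    return [sum(u.weights[k] * u.num_samples for u in fresh) / total for k in range(dim)]


updates = [
    ModelUpdate("a", [1.0, 2.0], num_samples=100, base_round=5),
    ModelUpdate("b", [3.0, 4.0], num_samples=300, base_round=5),
    ModelUpdate("c", [9.0, 9.0], num_samples=50, base_round=2),  # 3 rounds old: dropped
]
print(federated_average(updates, current_round=5))  # [2.5, 3.5]
```

Dropping stale updates trades a little wasted client work for a more consistent global model; asynchronous schemes that down-weight stale updates instead of discarding them are a common alternative.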

Balancing network openness against control also required new moderation tools. Unrestricted AI social networks risk echo chambers and misleading consensus, so OpenClaw uses adaptive filters intended to maintain quality without stifling learning.
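Adaptive filtering can be illustrated with a threshold that moves in response to feedback. In this hypothetical `AdaptiveFilter`, each message arrives with a quality score from some upstream scorer (assumed, not described in the article). Accepted messages that are later flagged push the threshold up, and a low flag rate lets it relax within fixed bounds. The `is_consensus` helper adds a simple echo-chamber guard: a claim counts as consensus only when it comes from enough distinct origins, so one assistant’s claim relayed many times does not count as agreement. Both are sketches of the general idea, not OpenClaw’s moderation code.

```python
from collections import deque


class AdaptiveFilter:
    """Accept messages whose quality score clears a threshold that adapts to feedback.

    Scores and flags are assumed inputs from an upstream scorer and reviewers.
    """

    def __init__(self, threshold: float = 0.5, target_flag_rate: float = 0.1,
                 step: float = 0.05, window: int = 20,
                 lower: float = 0.2, upper: float = 0.9) -> None:
        self.threshold = threshold
        self.target_flag_rate = target_flag_rate
        self.step = step
        self.lower, self.upper = lower, upper
        self.recent = deque(maxlen=window)  # True if an accepted message was later flagged

    def accept(self, score: float) -> bool:
        return score >= self.threshold

    def record_feedback(self, flagged: bool) -> None:
        self.recent.append(flagged)
        flag_rate = sum(self.recent) / len(self.recent)
        if flag_rate > self.target_flag_rate:
            # Too much bad content is getting through: tighten.
            self.threshold = min(self.upper, self.threshold + self.step)
        elif flag_rate < self.target_flag_rate:
            # Little is being flagged: relax so useful messages are not stifled.
            self.threshold = max(self.lower, self.threshold - self.step)


def is_consensus(origins: set[str], min_independent: int = 3) -> bool:
    """Echo-chamber guard: require agreement from several distinct origins."""
    return len(origins) >= min_independent


f = AdaptiveFilter()
for _ in range(5):
    f.record_feedback(flagged=True)
print(round(f.threshold, 2), f.accept(0.7))  # 0.75 False
print(is_consensus({"a", "a", "b"}))  # False: relays from "a" collapse into one origin
```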

When Should You Use AI Assistant Social Networking?

Not every AI use case benefits from networking assistants. Networking is best suited to scenarios that demand:

- Complex problem-solving involving diverse data sources.

- Rapid learning from distributed user interactions.

- Contexts requiring real-time updates from multiple AI perspectives.

For straightforward tasks or private assistance, standalone AI tools remain viable and simpler. The social networking model adds overhead and complexity that may outweigh benefits if your use case is narrow or privacy-critical.

Evaluating the Trade-Offs

Before adopting AI assistant social networking, ask:

- Can the use case tolerate the latency and complexity of distributed learning?

- Is there sufficient volume and diversity of data to justify collaboration?

- How critical is user data privacy, and can federated learning adequately address risks?

Understanding these trade-offs helps set realistic expectations.

Key Takeaways for AI Developers and Users

- Networking AI assistants is promising but far from plug-and-play. It demands a careful architecture that balances data sharing with privacy and control.

- Unstructured AI social interactions create more noise than value. Success lies in selective, moderated networking.

- Federated learning is a powerful enabler but requires managing synchronization and consistency challenges.

- Use cases matter: Complex, data-rich scenarios profit most, while simple, private tasks do not.

OpenClaw’s evolution is a reminder that new AI paradigms need rigorous testing and real-world validation. The path from isolated assistants to AI social networks reveals that bold innovation is loaded with pitfalls and compromises.

Decision Checklist: Is OpenClaw's AI Social Network Right for You?

Spend 15-25 minutes answering these questions to evaluate your next steps:

- What are your AI assistant’s primary tasks? Are they data-intensive or simple queries?

- Do you require collaboration between multiple AI agents to improve performance?

- Is user privacy your highest priority, and does federated learning meet compliance needs?

- Can your infrastructure handle the overhead of managing distributed AI updates?

- Are you prepared to implement moderation to filter irrelevant or misleading AI interactions?

- What performance benchmarks do you need to track post-implementation?

This checklist will help you decide whether an AI assistant social network along the lines of OpenClaw’s approach fits your goals, or whether a simpler strategy would serve you better.
