How does the Codex CLI coordinate complex AI workflows efficiently? The answer lies in the Codex agent loop, a mechanism that orchestrates models, tools, and prompts through the Responses API. Understanding this loop is essential for anyone working with Codex-based systems, especially when balancing speed, accuracy, and resource use.

This article unpacks the Codex agent loop from direct experience, explaining its components and how they work together. Whether you’re a developer, AI practitioner, or just curious about modern AI orchestration, this breakdown shows how Codex CLI manages tasks and why it matters.

What Is the Codex Agent Loop and Why Does It Matter?

The Codex agent loop is a continuous process within the Codex CLI that controls how AI models interact with tools and prompts to produce outputs. It uses the Responses API to handle interactions asynchronously and optimize performance. In essence, it acts as the conductor of an orchestra, ensuring each part (model, prompt, tool) plays in harmony.

This loop is critical because it enables complex reasoning chains, tool usage, and model switches without manual intervention. But running such an orchestration is not trivial: it requires balancing latency, accuracy, and system overhead.

How Does the Codex Agent Loop Work?

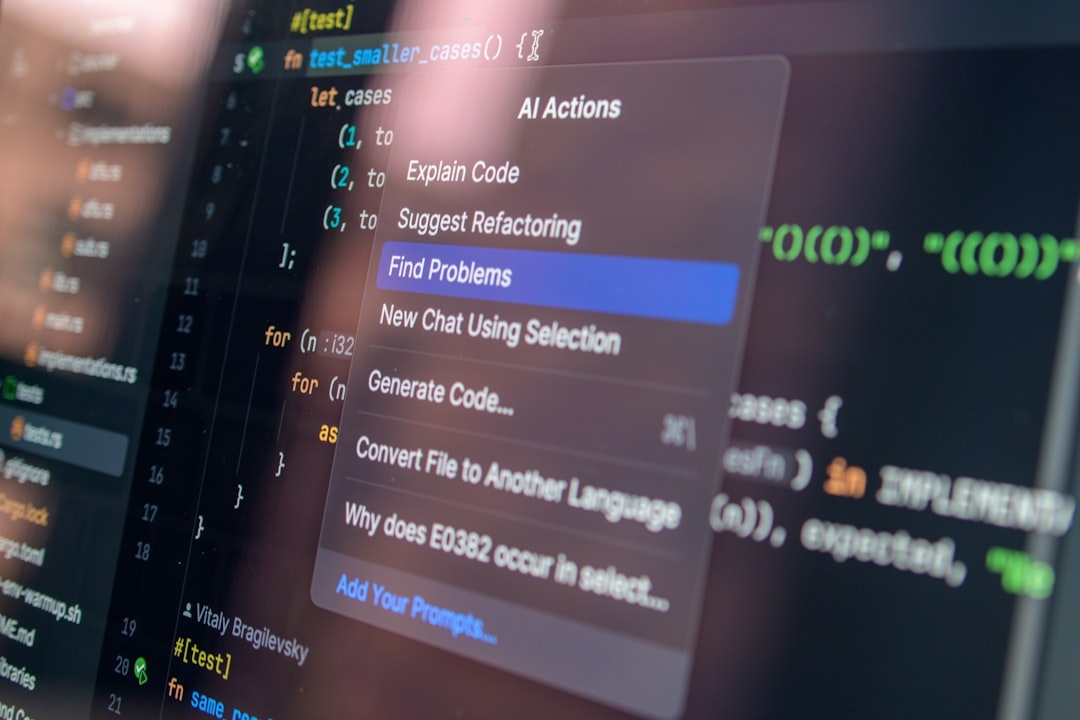

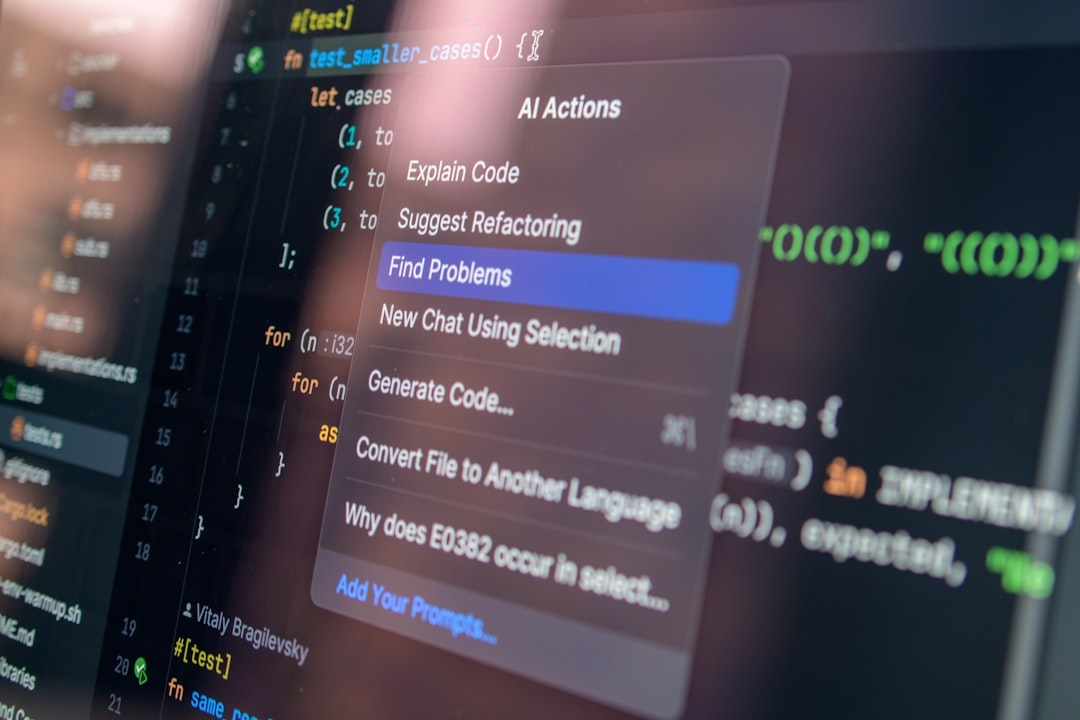

At its core, the loop continuously:

- Receives model outputs

- Decides what tools or prompts should run next

- Processes those tools or prompts asynchronously via the Responses API

- Updates state and repeats until task completion

The Responses API is the key technical component here: it lets the loop issue non-blocking calls and feed tool results back into the next model turn. This asynchronous design keeps the workflow moving even when tools have varying execution times.

Think of the loop as a refined assembly line in a factory. Each stage (model inference, tool action, prompt generation) feeds the next, and the Responses API is the conveyor system ensuring parts don’t pile up or wait unnecessarily.
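The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Codex CLI's actual implementation: `fake_model`, `add_tool`, `TOOLS`, and `ModelOutput` are hypothetical names, and the model call is a stub standing in for where a real loop would call the Responses API.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelOutput:
    """Hypothetical shape of one model turn: a tool request or a final answer."""
    tool_name: Optional[str] = None
    tool_args: dict = field(default_factory=dict)
    final_text: Optional[str] = None


async def fake_model(history: list) -> ModelOutput:
    """Stub model (assumption): a real loop would call the Responses API here."""
    tool_results = [h for h in history if h["role"] == "tool"]
    if not tool_results:
        # No tool output yet, so ask for the "add" tool.
        return ModelOutput(tool_name="add", tool_args={"a": 2, "b": 3})
    return ModelOutput(final_text=f"The sum is {tool_results[-1]['content']}")


async def add_tool(a: int, b: int) -> int:
    await asyncio.sleep(0)  # yield control, as an I/O-bound tool would
    return a + b


TOOLS = {"add": add_tool}


async def agent_loop(task: str, max_turns: int = 5) -> str:
    history = [{"role": "user", "content": task}]
    for _ in range(max_turns):
        output = await fake_model(history)        # 1. receive model output
        if output.final_text is not None:         # task complete
            return output.final_text
        tool = TOOLS[output.tool_name]            # 2. decide what runs next
        result = await tool(**output.tool_args)   # 3. run it without blocking
        history.append({"role": "tool", "content": str(result)})  # 4. update state
    raise RuntimeError("max_turns reached without a final answer")


def run_demo() -> str:
    return asyncio.run(agent_loop("What is 2 + 3?"))
```

Calling `run_demo()` drives the stub through one tool call and one final answer. The `max_turns` cap is a design choice in this sketch so that a model that never produces a final answer cannot keep the loop running indefinitely.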

When Should You Use the Codex Agent Loop?

The loop shines in situations where tasks are dynamic and require diverse toolsets or iterative reasoning. For example:

- Automated coding assistants combining multiple APIs

- Complex information retrieval fused with language models

- Multi-step workflows requiring adaptive logic

However, the loop introduces complexity and overhead. For a simple single-step prompt call, it is likely overkill and may be slower than calling the model directly.

From experience, rigidly scripting every step into the loop often causes delays and debugging headaches. The loop is best suited to scenarios that demand flexible, ongoing orchestration rather than one-off calls.

What Are the Main Trade-Offs?

While powerful, the agent loop isn’t flawless. Key trade-offs include:

- Latency vs. Flexibility: The asynchronous Responses API lets you handle multiple concurrent tasks, but the extra scheduling and round trips can make an individual call slower than a direct synchronous call.

- Complexity vs. Control: Managing orchestration code increases codebase complexity but gives fine control over task execution.

- Resource Use: Running multiple tools and models concurrently demands more compute and memory, which can be costly.

Understanding these trade-offs is crucial before adopting the loop in production. Blindly assuming it improves every workflow leads to wasted resources and longer debug cycles.
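To make the latency side of the trade-off concrete, here is a small sketch comparing sequential and concurrent execution of independent tools with `asyncio`. The `tool` coroutine is a hypothetical stand-in that only sleeps; it does not measure the per-call orchestration overhead mentioned above, only the wall-clock benefit of overlapping independent waits.

```python
import asyncio
import time


async def tool(delay: float) -> float:
    """Hypothetical tool that just waits, simulating network or I/O latency."""
    await asyncio.sleep(delay)
    return delay


async def run_sequential(delays: list) -> float:
    start = time.perf_counter()
    for d in delays:
        await tool(d)  # each tool waits for the previous one to finish
    return time.perf_counter() - start


async def run_concurrent(delays: list) -> float:
    start = time.perf_counter()
    await asyncio.gather(*(tool(d) for d in delays))  # waits overlap
    return time.perf_counter() - start


def compare(delays: list) -> tuple:
    """Return (sequential_seconds, concurrent_seconds) for the same delays."""
    sequential = asyncio.run(run_sequential(delays))
    concurrent = asyncio.run(run_concurrent(delays))
    return sequential, concurrent
```

With independent tools, the concurrent run takes roughly as long as the slowest tool, while the sequential run takes roughly the sum of all delays. If each step depends on the previous one, that gain disappears and only the overhead remains.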

How to Implement and Optimize the Codex Agent Loop

Pragmatically, implementing the agent loop involves:

- Defining clear task states and transitions to prevent infinite loops

- Using the Responses API to trigger asynchronous calls and track completions

- Leveraging built-in prompt templates for standardizing interactions

- Handling errors and timeouts gracefully to maintain stability
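One way to make "clear task states and transitions" concrete is an explicit transition table with a step budget. The sketch below is an assumption about how such a guard could look; the state names, the `ALLOWED` table, and the `LoopState` class are hypothetical and not part of the Codex CLI.

```python
from enum import Enum, auto


class State(Enum):
    PLANNING = auto()
    RUNNING_TOOL = auto()
    DONE = auto()
    FAILED = auto()


# Which states may follow which. Terminal states allow no further transitions.
ALLOWED = {
    State.PLANNING: {State.RUNNING_TOOL, State.DONE, State.FAILED},
    State.RUNNING_TOOL: {State.PLANNING, State.FAILED},
    State.DONE: set(),
    State.FAILED: set(),
}


class LoopState:
    def __init__(self, max_steps: int = 10) -> None:
        self.state = State.PLANNING
        self.steps = 0
        self.max_steps = max_steps

    def transition(self, new_state: State) -> None:
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"illegal transition {self.state.name} -> {new_state.name}"
            )
        self.steps += 1
        # Step budget: once exceeded, force a terminal state instead of
        # letting PLANNING <-> RUNNING_TOOL cycle forever.
        if self.steps > self.max_steps and new_state not in (State.DONE, State.FAILED):
            new_state = State.FAILED
        self.state = new_state
```

Rejecting illegal transitions early tends to surface orchestration bugs at the point where they happen, and the step budget turns a potential infinite loop into an explicit `FAILED` state that monitoring can pick up.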

From direct deployment experience, it helps to monitor execution traces and performance metrics actively. Pinpointing bottlenecks often leads to adjusting concurrency levels or caching intermediate results.
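Adjusting concurrency levels can be as simple as placing a semaphore in front of tool calls and recording how many run at once. The following is a sketch under assumptions: `run_tool`, `run_all`, and `ConcurrencyTracker` are hypothetical names, and the tool body is a short sleep rather than real work.

```python
import asyncio


class ConcurrencyTracker:
    """Records how many tool calls were in flight at the same time."""

    def __init__(self) -> None:
        self.active = 0
        self.peak = 0


async def run_tool(i: int, sem: asyncio.Semaphore, tracker: ConcurrencyTracker) -> int:
    async with sem:  # wait here if `limit` calls are already running
        tracker.active += 1
        tracker.peak = max(tracker.peak, tracker.active)
        await asyncio.sleep(0.01)  # stand-in for real tool work
        tracker.active -= 1
        return i * i


async def run_all(n: int, limit: int) -> tuple:
    sem = asyncio.Semaphore(limit)
    tracker = ConcurrencyTracker()
    results = await asyncio.gather(*(run_tool(i, sem, tracker) for i in range(n)))
    return list(results), tracker.peak
```

For example, `asyncio.run(run_all(6, 2))` runs six calls with at most two in flight. The recorded peak is the kind of metric worth exporting alongside traces when tuning the limit.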

Another tip: keep your tool interfaces as stateless as possible to reduce side effects. This simplifies retry logic and error handling within the agent loop.
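To illustrate why stateless tools simplify retries, here is a minimal sketch. `word_count`, `with_retry`, and `make_flaky` are hypothetical helpers written for this example; the flaky wrapper keeps a counter only to simulate transient failures, while the tool itself depends on nothing but its input.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def word_count(text: str) -> int:
    """Stateless tool: the result depends only on the input."""
    return len(text.split())


def with_retry(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.01) -> T:
    """Call fn until it succeeds, backing off exponentially between attempts."""
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return fn()
        except Exception as exc:
            last_error = exc
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"all {attempts} attempts failed") from last_error


def make_flaky(failures: int) -> Callable[[], int]:
    """Simulate a call that fails `failures` times before succeeding."""
    remaining = {"n": failures}

    def call() -> int:
        if remaining["n"] > 0:
            remaining["n"] -= 1
            raise ConnectionError("transient failure")
        return word_count("retry me safely")

    return call
```

Because `word_count` has no side effects, calling it a second or third time is always safe. A tool that writes to a database or sends a message would need idempotency keys or similar safeguards before the same retry wrapper could be applied.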

Real-World Scenarios and Lessons Learned

In one project automating report generation with live data fetching, the Codex agent loop managed fetching, parsing, and text generation steps. While the orchestration reduced manual syncs, unexpected API latency sometimes stalled the loop. Optimizing tool timeouts and implementing fallback prompts improved reliability.
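The timeout-plus-fallback pattern described here can be sketched with `asyncio.wait_for`. This is an illustration only, not the project's code: `slow_fetch` and `call_with_fallback` are hypothetical names, and the fallback is a plain string standing in for a fallback prompt or cached value.

```python
import asyncio


async def slow_fetch(delay: float) -> str:
    """Hypothetical live-data fetch whose latency is controlled by `delay`."""
    await asyncio.sleep(delay)
    return "live data"


async def call_with_fallback(delay: float, timeout: float, fallback: str) -> str:
    """Return the fetch result, or `fallback` if it exceeds `timeout` seconds."""
    try:
        return await asyncio.wait_for(slow_fetch(delay), timeout=timeout)
    except asyncio.TimeoutError:
        # The slow call is cancelled by wait_for; the loop continues with
        # degraded but usable input instead of stalling.
        return fallback
```

A fast fetch returns its own result, while a fetch slower than the timeout yields the fallback value. Choosing the timeout is a judgment call: too short and healthy calls get discarded, too long and the loop stalls as before.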

Another case involved chatbot services using the loop for real-time multi-turn conversation management. Here, the loop handled switching between knowledge bases and APIs. The lesson: design your orchestration for graceful degradation when external services become unresponsive.

Quick Reference: Key Takeaways

- The Codex agent loop orchestrates AI models, prompts, and tools asynchronously using the Responses API.

- Best suited for complex, multi-step workflows needing flexibility and adaptive reasoning.

- Trade-offs involve latency, complexity, and resource use, so avoid over-engineering simple tasks.

- Implementation requires clear state management, error handling, and performance monitoring.

- Tools should be stateless and designed for reliable retries.

Decision Checklist: Should You Use the Codex Agent Loop?

Set aside 15 to 25 minutes to answer the following questions and decide whether this orchestration fits your needs:

- Is your workflow multi-step, and does it require dynamic tool or model selection?

- Do you need asynchronous processing to handle variable-latency tools?

- Can your system tolerate added orchestration complexity?

- Are your resources sufficient to manage concurrent tooling and model execution?

- Do you have monitoring in place to detect and respond to process stalls or failures?

If you answered yes to most, the Codex agent loop is worth exploring. Otherwise, consider simpler synchronous calls or scripted sequences.

Understanding the Codex agent loop’s strengths and limitations helps you leverage its orchestration power effectively. Approach your implementation with pragmatism and clear performance goals to avoid pitfalls witnessed firsthand in production environments.
