When I first encountered LiveKit’s technology integrated into OpenAI’s ChatGPT voice mode, I was impressed by how smoothly conversations flowed, almost like chatting with a person rather than a machine. This breakthrough reflects years of dedicated development by LiveKit, a startup now valued at $1 billion after a significant funding round.

The evolution of voice AI engines is transforming how people interact with technology. LiveKit’s growth highlights the increasing importance of voice-enabled AI in everyday applications such as virtual assistants, customer service, and accessibility tools.

What Is LiveKit and Why Does It Matter?

LiveKit is a voice AI platform built on real-time audio infrastructure: it streams speech between users and AI models with very low delay and provides tools that connect speech recognition, language models, and speech synthesis. Founded five years ago, it has become a key player in the AI landscape through its partnership with OpenAI, powering the voice mode in ChatGPT.

The company's recent $100 million funding round led by Index Ventures pushed its valuation to $1 billion, underscoring investor confidence in voice AI's future. This milestone also reflects LiveKit's role in pioneering voice interfaces that bring AI conversations closer to human experience.

Understanding Voice AI Engines

Voice AI engines combine automatic speech recognition (ASR), which converts spoken language into text, and text-to-speech (TTS), which converts text back into human-like speech, with a language model that decides what to say in between. LiveKit ties these components together over a real-time connection so users can talk to ChatGPT and hear its replies without noticeable gaps.
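A minimal sketch of how these stages chain together for one conversational turn: recognize speech, generate a reply, synthesize the reply. The three stage functions are hypothetical stand-ins, not LiveKit or OpenAI APIs; the "ASR" stub simply treats its input bytes as UTF-8 text so the example runs without any speech model.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One user utterance and the system's reply."""
    transcript: str
    reply_text: str
    reply_audio: bytes


def recognize_speech(audio: bytes) -> str:
    # Hypothetical ASR stand-in: a real system would send audio to a speech recognizer.
    return audio.decode("utf-8")


def generate_reply(text: str) -> str:
    # Hypothetical language-model stand-in: a real system would call an LLM here.
    return f"You said: {text}"


def synthesize_speech(text: str) -> bytes:
    # Hypothetical TTS stand-in: a real system would return synthesized audio samples.
    return text.encode("utf-8")


def run_turn(audio: bytes) -> Turn:
    """Run one conversational turn: ASR, then the language model, then TTS."""
    transcript = recognize_speech(audio)
    reply_text = generate_reply(transcript)
    reply_audio = synthesize_speech(reply_text)
    return Turn(transcript, reply_text, reply_audio)


turn = run_turn(b"hello there")
print(turn.reply_text)  # You said: hello there
```

Keeping each stage behind its own function makes it easy to swap one component (say, a different TTS voice) without touching the rest of the loop.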

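Such engines rely on deep learning models trained on vast datasets to interpret accents, intonation, and context accurately. Achieving low latency and high accuracy at the same time is technically difficult, because more accurate models generally take longer to run and every stage in the chain adds delay; this is the problem LiveKit's infrastructure is built to address.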
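Why latency is hard becomes clearer when you add up the stages: in a strictly sequential pipeline, the user waits for the sum of every stage's delay. The stage names and timings below are illustrative assumptions, not measurements of LiveKit or ChatGPT.

```python
# Illustrative per-stage delays in milliseconds (assumed values, not measurements).
STAGE_DELAYS_MS = {
    "network_uplink": 50,
    "speech_recognition": 200,
    "language_model_first_token": 300,
    "speech_synthesis_first_audio": 150,
    "network_downlink": 50,
}


def total_latency_ms(delays: dict[str, int]) -> int:
    """Delay before the user hears a reply, if stages run strictly one after another."""
    return sum(delays.values())


def slowest_stage(delays: dict[str, int]) -> str:
    """Name of the stage contributing the most delay."""
    return max(delays, key=delays.__getitem__)


print(total_latency_ms(STAGE_DELAYS_MS))  # 750
print(slowest_stage(STAGE_DELAYS_MS))     # language_model_first_token
```

Because the total is a sum, trimming 100 ms from any stage helps equally. Streaming systems go further by overlapping stages, for example starting speech synthesis before the full reply has been generated.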

How Does LiveKit Power ChatGPT’s Voice Mode?

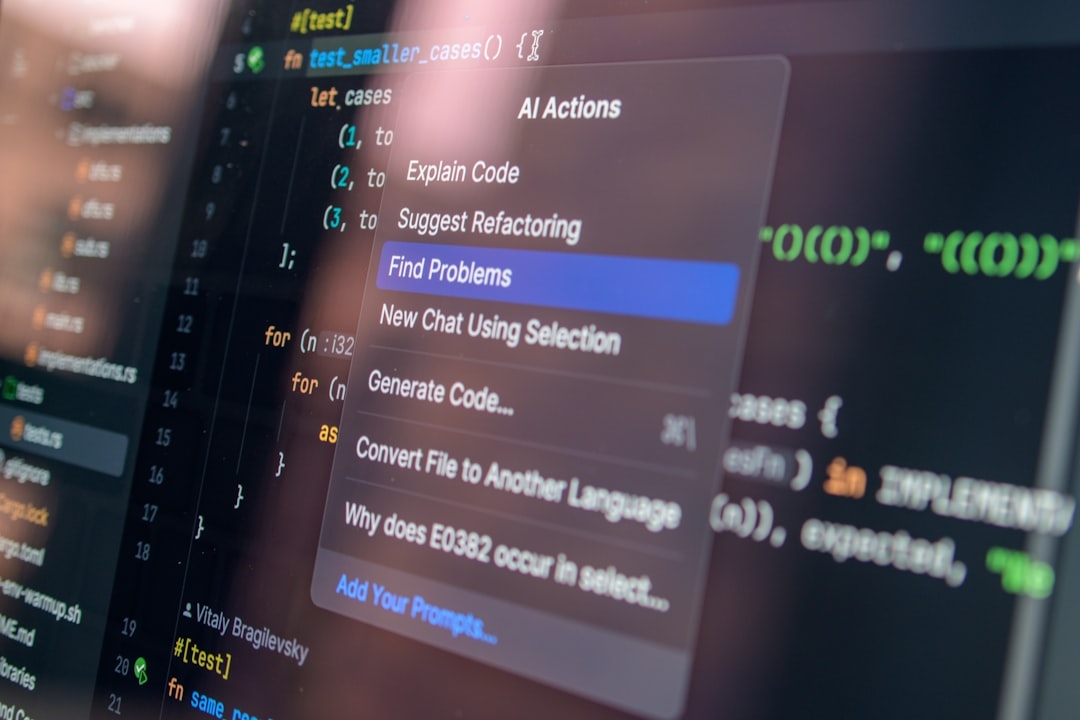

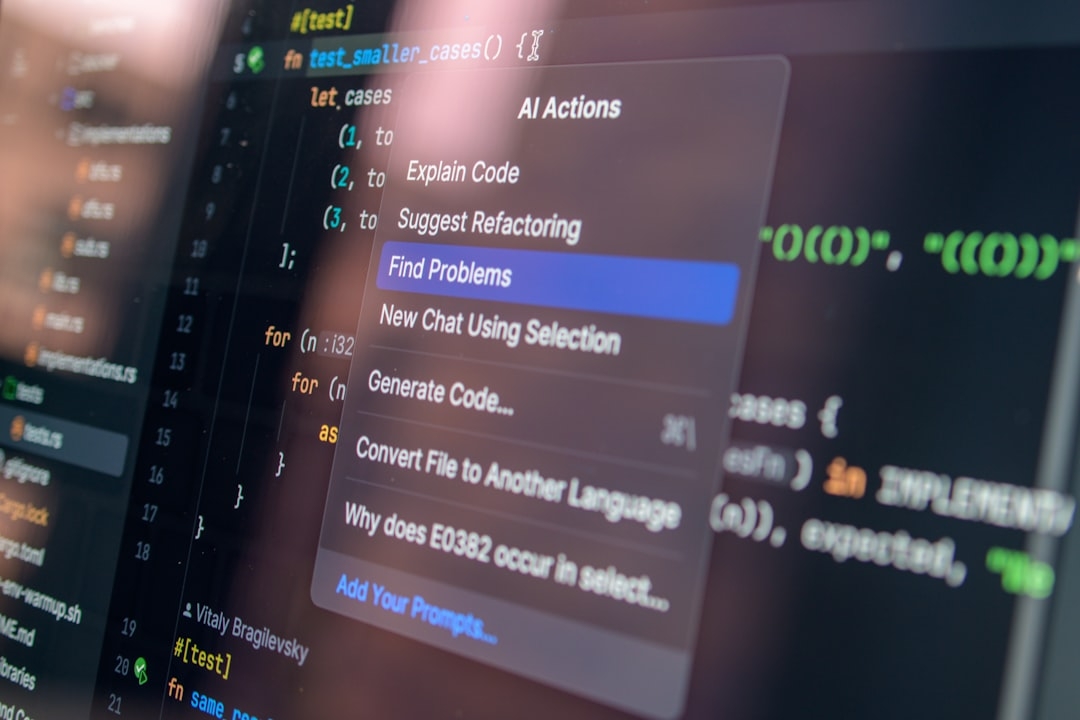

At its core, a voice conversation is a loop. In a classic voice pipeline, the user's audio is captured, speech is converted into text (ASR), the text is fed to ChatGPT's language model, and the AI-generated reply is converted back into spoken words (TTS). LiveKit's infrastructure carries the audio in both directions fast enough that this cycle happens in near real time, enabling fluid conversations.

Key to this performance is LiveKit's scalable cloud infrastructure, tuned to balance speed against audio quality. The result is a voice experience that sounds natural, preserving tonal nuances and avoiding the robotic effects often criticized in older systems.
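Near-real-time loops like this typically move audio in short frames rather than whole recordings, so processing can start while the user is still speaking. The arithmetic below assumes 16 kHz mono 16-bit PCM audio and 20 ms frames; these are common choices in speech systems but are assumptions here, not confirmed details of LiveKit's configuration.

```python
SAMPLE_RATE_HZ = 16_000   # assumed sample rate
FRAME_MS = 20             # assumed frame duration
BYTES_PER_SAMPLE = 2      # 16-bit PCM, mono


def samples_per_frame(sample_rate_hz: int, frame_ms: int) -> int:
    """Number of audio samples in one frame of the given duration."""
    return sample_rate_hz * frame_ms // 1000


def split_into_frames(pcm: bytes, frame_bytes: int) -> list[bytes]:
    """Split raw PCM into fixed-size frames, dropping any trailing partial frame."""
    return [pcm[i:i + frame_bytes] for i in range(0, len(pcm) - frame_bytes + 1, frame_bytes)]


n = samples_per_frame(SAMPLE_RATE_HZ, FRAME_MS)        # 320 samples per frame
frame_bytes = n * BYTES_PER_SAMPLE                      # 640 bytes per frame
one_second = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)   # one second of silence
frames = split_into_frames(one_second, frame_bytes)
print(n, frame_bytes, len(frames))  # 320 640 50
```

Smaller frames reduce the wait before each chunk can be sent, at the cost of more per-frame overhead.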

Can Anyone Easily Use Voice AI Like LiveKit?

While voice AI is growing in popularity, common misconceptions include assuming these engines work perfectly out of the box or handle every accent flawlessly. In reality, even systems built on leading platforms like LiveKit need continuous refinement based on user data and feedback.

Latency issues, background noise, or ambiguous speech can still degrade performance. Thus, deploying voice AI in production requires ongoing tuning and monitoring.
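Monitoring latency usually means tracking the slow tail, not just the average, because a few sluggish turns are what users notice. A minimal nearest-rank percentile sketch over hypothetical per-turn response times (the numbers are invented for illustration):

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical response times (ms) for ten conversational turns; one turn stalled.
latencies_ms = [620, 580, 710, 640, 600, 1900, 650, 590, 630, 700]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(p50, p95)  # 630 1900
```

The mean of these samples is 762 ms, which hides the single 1.9-second stall that the 95th percentile exposes.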

Comparison: LiveKit vs. Other Voice AI Providers

| Feature | LiveKit | Other voice AI providers (general) |
|---|---|---|
| Integration with OpenAI | Partner powering ChatGPT voice mode | Varies; few direct OpenAI partnerships |
| Latency | Low, optimized for real-time conversation | Varies; delays can be noticeable |
| Speech recognition accuracy | High, supports multiple accents and contexts | Varies; often less tuned for complex inputs |
| Text-to-speech naturalness | Highly natural, expressive voices | Mixed; some sound robotic |
| Funding and valuation | $100M round, $1B valuation | Varies; ranges from smaller startups to established players |

What Challenges Does Voice AI Still Face?

Despite advances, voice AI engines like LiveKit encounter several hurdles:

- Ambient Noise: Background sounds can interfere with accurate speech recognition, requiring sophisticated noise cancellation.

- Context Understanding: Voice AI struggles with ambiguous phrases or slang, unlike humans who infer context easily.

- Latency vs. Quality: Striking a balance between fast responses and natural-sounding speech necessitates trade-offs.

- Privacy Concerns: Processing voice data raises security and data protection considerations, especially with cloud services.
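Ambient noise is often tackled first by deciding which audio frames contain speech at all. The crudest version is an energy gate: compute each frame's root-mean-square (RMS) level and treat frames above a threshold as speech. This is a deliberately simple stand-in, not how LiveKit or ChatGPT detect speech; production systems use trained voice-activity detectors, and the 500.0 threshold is an arbitrary assumption.

```python
import math


def rms(samples: list[int]) -> float:
    """Root-mean-square amplitude of a frame of 16-bit PCM samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def speech_frames(frames: list[list[int]], threshold: float = 500.0) -> list[bool]:
    """Flag each frame as speech (True) if its RMS level exceeds the threshold."""
    return [rms(frame) > threshold for frame in frames]


quiet_hum = [30, -25, 40, -35] * 80            # low-level background noise
loud_voice = [3000, -2800, 3100, -2900] * 80   # much stronger signal
print(speech_frames([quiet_hum, loud_voice, quiet_hum]))  # [False, True, False]
```

The gate also shows why noise remains a hard problem: a loud non-speech sound, such as a slammed door, would pass the threshold just as easily as a voice.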

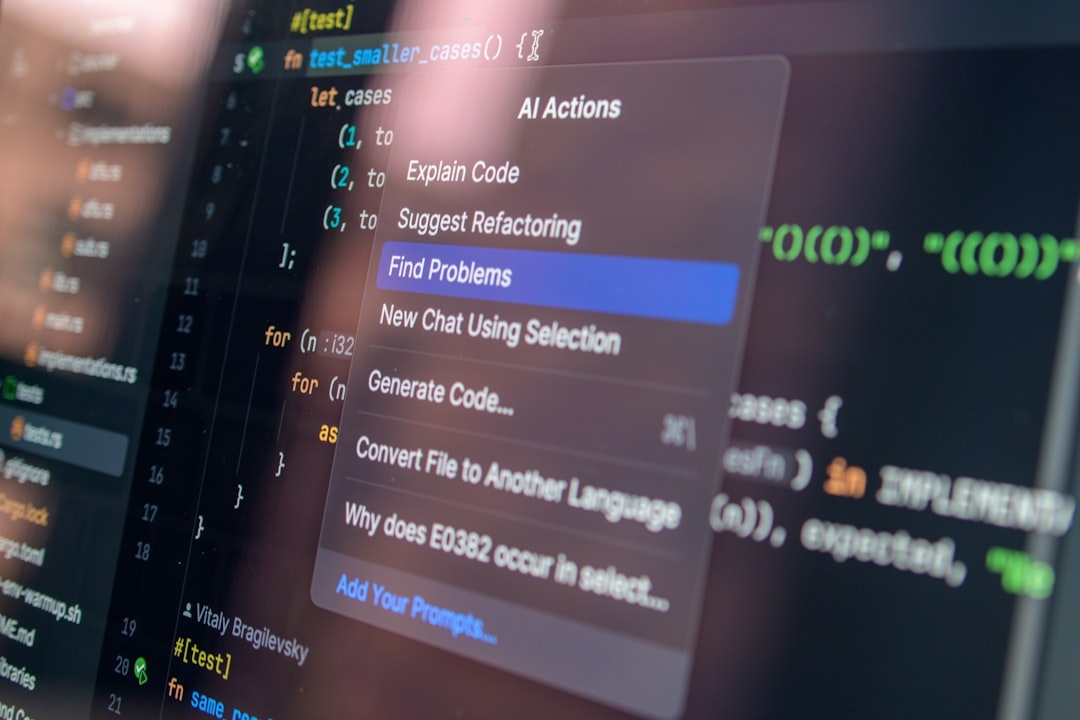

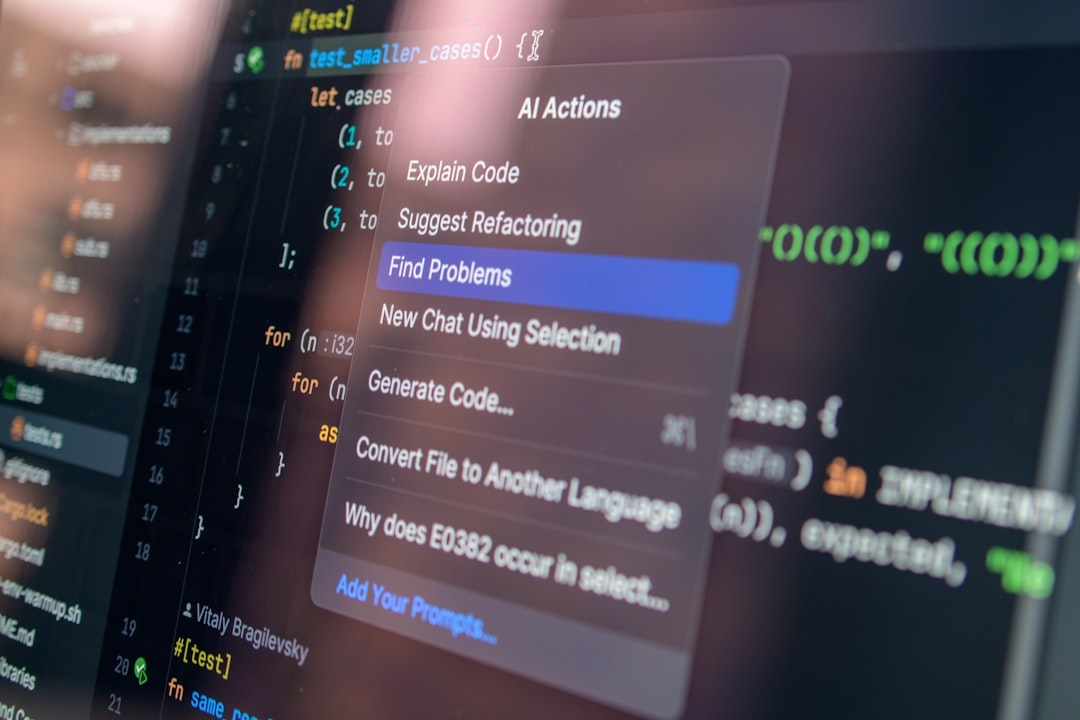

How Can Developers Start Experimenting with Voice AI?

For those interested in exploring voice AI firsthand, many services, including LiveKit, offer SDKs or APIs that enable rapid prototyping. The key is connecting the ASR and TTS components so that audio flows between them with minimal delay.

Beginners should focus on understanding how latency affects the conversation and on testing with varied speech samples to gauge accuracy across accents and environments. Evaluating the user experience critically helps refine applications.
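A standard way to gauge recognition accuracy across accents and environments is word error rate (WER): the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the recognizer's output, divided by the number of reference words. The transcripts below are hypothetical test samples, not real recognizer output.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level Levenshtein distance / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] holds the edit distance between the first i-1 reference words
    # and the first j hypothesis words (one row of the dynamic-programming table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


# Hypothetical samples: same command recorded under different conditions.
samples = {
    "quiet room": ("turn on the kitchen lights", "turn on the kitchen lights"),
    "street noise": ("turn on the kitchen lights", "turn on the chicken light"),
}
for condition, (ref, hyp) in samples.items():
    print(f"{condition}: WER = {word_error_rate(ref, hyp):.2f}")
# quiet room: WER = 0.00
# street noise: WER = 0.40
```

Grouping test samples by condition (accent, noise level, microphone) shows where the recognizer struggles, rather than hiding problems inside one overall average.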

Expert Tip: Start Small, Iterate Fast

Voice AI projects often fail when developers assume perfect accuracy from the outset. Instead, gather user feedback in early stages and improve models progressively. Pay close attention to edge cases like homonyms or overlapping speech.

Experimentation platforms like LiveKit offer valuable tools for balancing speed and quality without reinventing foundational speech technology.

What Is Next For LiveKit and Voice AI?

LiveKit’s $1 billion valuation marks a significant step toward mass adoption of voice-enabled AI. As OpenAI continues enhancing conversational AI, technologies like LiveKit enable seamless human-computer interactions beyond typing.

While complete naturalness remains elusive, continuous improvements in machine learning models and infrastructure will expand voice AI's reach, from accessibility enhancements to hands-free computing as an everyday norm.

Concrete Experiment: Try Your Own Voice AI Demo

In the next 20 minutes, test an existing voice AI integration such as ChatGPT's voice mode powered by LiveKit. Notice how the system handles your accent and background noise, and how quickly it responds. Record your observations on what feels natural and where it struggles.

This practical test helps you understand the real-world strengths and limitations of cutting-edge voice AI engines like LiveKit.
