Scaling applications that serve hundreds of millions of users is never straightforward. When OpenAI needed to support 800 million ChatGPT users, the challenge didn’t lie solely in AI model optimization but also in the database layer powering the service. PostgreSQL, a popular open-source relational database, was at the core of this effort. However, scaling it to handle millions of queries per second required precise engineering and architectural ingenuity.

This article explores the concrete strategies OpenAI used to scale PostgreSQL effectively to meet ChatGPT’s unprecedented demand. Whether you’re a developer, system architect, or tech enthusiast, understanding these trade-offs and techniques sheds light on what it takes to power applications at internet scale.

How Does PostgreSQL Handle Millions of Queries?

PostgreSQL is well-known for reliability and robustness, but conventional setups aren’t built to handle millions of queries per second out of the box. OpenAI approached this by deploying multiple read replicas of the primary database. Because every replica holds a copy of the same data, read queries can be spread across several nodes, improving throughput and resilience.
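To make the replica idea concrete, here is a minimal sketch of application-side read/write routing. It assumes a single writable primary plus read-only replicas; the endpoint names are hypothetical placeholders, the SQL classification is deliberately naive, and none of this is OpenAI’s actual code.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ReadWriteRouter:
    """Sends writes to the primary and spreads reads round-robin over replicas.

    Endpoint names are hypothetical; a real setup would hold connection
    pools from a PostgreSQL driver instead of plain strings.
    """
    primary: str
    replicas: list[str]
    _cycle: itertools.cycle = field(init=False, repr=False)

    def __post_init__(self) -> None:
        # Fall back to the primary if no replicas are configured.
        self._cycle = itertools.cycle(self.replicas or [self.primary])

    def route(self, sql: str) -> str:
        # Naive classification: only statements starting with SELECT are reads.
        is_read = sql.lstrip().upper().startswith("SELECT")
        return next(self._cycle) if is_read else self.primary
```

A statement such as `SELECT ... FOR UPDATE` takes row locks and is rejected on a read-only standby, so a production router would classify queries explicitly (for example, per call site) rather than by inspecting SQL text.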

However, replicas alone don’t solve all problems. To prevent replicas from being overwhelmed, OpenAI implemented rate limiting at various layers. Rate limiting controls how many requests a service accepts over time, protecting the database from sudden spikes that could degrade performance or cause outages.
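The article does not say which rate-limiting algorithm OpenAI used. A token bucket is one common choice; the sketch below, written under that assumption, admits short bursts up to `capacity` while enforcing an average of `rate` requests per second. The injectable `clock` parameter exists only to make the behaviour easy to test.

```python
import time


class TokenBucket:
    """Token-bucket limiter: bursts up to `capacity`, refills at `rate` tokens/s."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller should reject or queue the request
```

This version is not thread-safe: a multi-threaded service would guard `allow` with a lock, and a fleet of application servers would need to share the bucket state in a central store to enforce a global limit.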

Another key method was introducing extensive caching layers. These caches hold frequently requested data closer to the application, reducing the load on PostgreSQL itself. By minimizing redundant queries, caching boosts overall system efficiency.
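A common way to put a cache in front of PostgreSQL is the cache-aside pattern: check the cache, fall back to the database on a miss, then store the result with an expiry. The following is a minimal in-process sketch with a time-to-live (TTL). The `loader` callback is a hypothetical stand-in for a real query, and a shared cache such as Redis would replace the dictionary in a multi-server deployment.

```python
import time
from typing import Any, Callable, Hashable


class TTLCache:
    """Cache-aside helper: entries expire `ttl_seconds` after being loaded."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[Hashable, tuple[Any, float]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            self.hits += 1
            return entry[0]
        # Miss or expired: go to the database (e.g. a SELECT) and cache the result.
        self.misses += 1
        value = loader(key)
        self._store[key] = (value, now + self.ttl)
        return value

    def invalidate(self, key: Hashable) -> None:
        # Call after a write so readers don't see stale data until the TTL expires.
        self._store.pop(key, None)
```

The explicit `invalidate` method matters as much as the TTL: without it, a value changed in PostgreSQL can stay stale in the cache for the rest of its expiry window.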

What Is Workload Isolation and Why Does It Matter?

Workload isolation refers to separating different types of database tasks so they don’t compete for resources. Within its architecture, OpenAI isolated write-heavy operations (which change data) from read-heavy activities (which only fetch data). This separation helps keep performance consistent: writes take locks, must run on the primary, and generate write-ahead log (WAL) traffic that every replica has to replay, so a burst of writes can slow down unrelated reads when both share the same resources.

For instance, administrative or background jobs were run on different database instances than the main ChatGPT queries. This way, heavy analytics or maintenance tasks didn’t affect user-facing responsiveness.
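One way to express workload isolation in application code is to give each class of work its own connection target and limits. In the sketch below, the workload names, host names, pool sizes, and timeouts are all hypothetical; only the `statement_timeout` setting and the libpq `options` connection parameter are real PostgreSQL features.

```python
from dataclasses import dataclass
from enum import Enum


class Workload(Enum):
    USER_FACING = "user_facing"
    BACKGROUND = "background"
    ANALYTICS = "analytics"


@dataclass(frozen=True)
class PoolPolicy:
    host: str                  # hypothetical dedicated instance per workload
    max_connections: int       # client-side pool size
    statement_timeout_ms: int  # queries running longer are cancelled

    def libpq_options(self) -> str:
        # Value for the libpq "options" parameter; sets statement_timeout per session.
        return f"-c statement_timeout={self.statement_timeout_ms}"


# Hypothetical policies: short timeouts for interactive traffic, long ones elsewhere.
POLICIES = {
    Workload.USER_FACING: PoolPolicy("pg-user-facing", 50, 500),
    Workload.BACKGROUND: PoolPolicy("pg-background", 10, 30_000),
    Workload.ANALYTICS: PoolPolicy("pg-analytics", 5, 300_000),
}


def policy_for(workload: Workload) -> PoolPolicy:
    return POLICIES[workload]
```

Keeping background and analytics sessions on separate hosts with their own pools means a slow report cannot hold connections or CPU that interactive requests need, while the short user-facing timeout stops one bad query from piling up behind others.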

When Should You Use Replicas, Caching, or Rate Limiting?

Each method comes with its own trade-offs and ideal use cases:

- Replicas: Best used to scale read-intensive workloads. They add read capacity, but replicas can lag behind the primary, so the application must tolerate or work around slightly stale reads.

- Caching: Ideal for reducing latency and query volume on frequently accessed data but requires careful cache invalidation strategies to avoid stale data.

- Rate Limiting: Crucial to protect backend systems under load bursts but can result in rejected requests if thresholds are too low or poorly configured.
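The consistency cost of replicas shows up as replication lag: a user who just wrote data may not see it if the next read lands on a replica. A simple mitigation, sketched here as an assumption rather than as OpenAI’s approach, is read-your-writes routing, which sends a user’s reads to the primary for a short window after that user writes. The fixed window is a hypothetical simplification; a real system might instead compare against measured lag, for example via `pg_last_xact_replay_timestamp()` on the replica.

```python
import time


class StickyPrimaryRouter:
    """Routes a user's reads to the primary for `window_seconds` after their last write."""

    def __init__(self, window_seconds: float, clock=time.monotonic):
        self.window = window_seconds
        self.clock = clock
        self._last_write: dict[str, float] = {}

    def record_write(self, user_id: str) -> None:
        self._last_write[user_id] = self.clock()

    def target_for_read(self, user_id: str) -> str:
        last = self._last_write.get(user_id)
        # Recent writer: a replica may not have replayed the change yet.
        if last is not None and self.clock() - last < self.window:
            return "primary"
        return "replica"
```

The trade-off is visible in the code: the longer the window, the safer the reads, but the more traffic returns to the primary that replicas were meant to offload.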

In OpenAI’s case, the combination ensured both high availability and data integrity under massive demand.

Common Mistakes in Scaling PostgreSQL

From firsthand experience working on high-scale systems, some pitfalls are often overlooked:

- Ignoring workload characteristics: Treating all queries the same leads to inefficient resource use.

- Over-reliance on replicas: Simply adding more replicas without isolating workloads can cause replication lag and stale data issues.

- Neglecting cache invalidation: Stale caches cause inconsistent user experiences.

- One-size-fits-all rate limiting: Applying uniform rate limits can block important background jobs or slow critical write operations.
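To avoid one-size-fits-all limits, each request class can get its own budget. This sketch uses a fixed-window counter, a simpler alternative to a token bucket; the class names and limits are hypothetical, and requests from unknown classes are rejected by default.

```python
import time
from collections import defaultdict


class PerClassLimiter:
    """Fixed-window limiter with a separate request budget per class."""

    def __init__(self, limits_per_window: dict[str, int],
                 window_seconds: float = 1.0, clock=time.monotonic):
        self.limits = limits_per_window
        self.window = window_seconds
        self.clock = clock
        self._window_start = clock()
        self._counts: defaultdict[str, int] = defaultdict(int)

    def allow(self, request_class: str) -> bool:
        now = self.clock()
        if now - self._window_start >= self.window:
            # A new window has started: reset every class's counter.
            self._window_start = now
            self._counts.clear()
        limit = self.limits.get(request_class, 0)  # unknown classes get no budget
        if self._counts[request_class] < limit:
            self._counts[request_class] += 1
            return True
        return False
```

Fixed windows can admit up to twice the limit across a window boundary (a burst at the end of one window plus another at the start of the next); a sliding window or token bucket smooths that out.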

Recognizing these issues early enables better architecture decisions and smoother scaling.

What Are the Real Trade-Offs in This Architecture?

Scaling PostgreSQL like OpenAI did forces you to balance complexity, cost, and performance:

- Added replicas improve read capacity but increase infrastructure costs and complexity.

- Caching enhances speed but adds another layer to maintain and troubleshoot.

- Workload isolation avoids resource contention but requires careful orchestration.

- Rate limiting protects stability but risks user dissatisfaction if not finely tuned.

The key takeaway is that there is no silver bullet; the architecture must evolve with traffic patterns and user behavior.

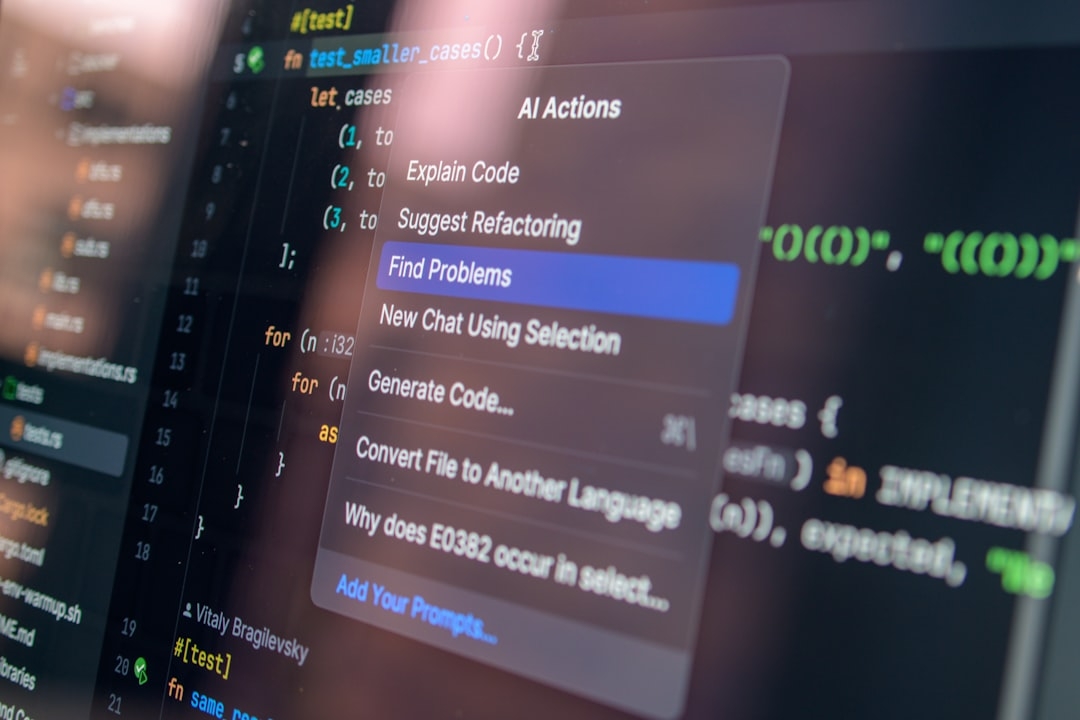

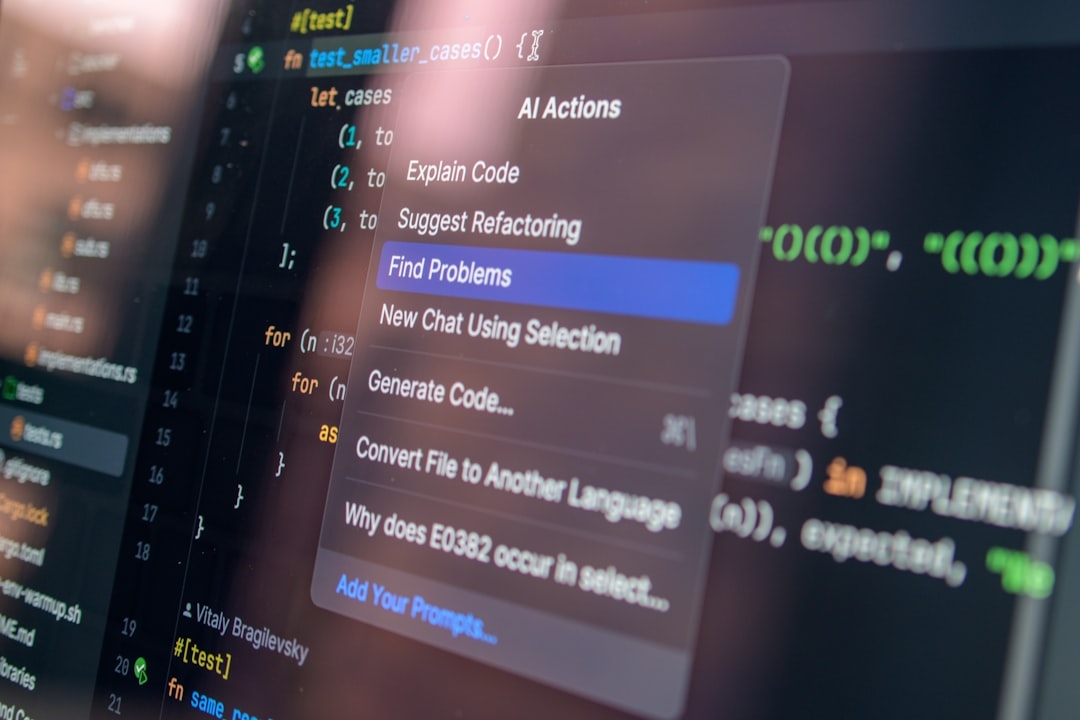

How Can You Experiment with These Concepts?

To better understand these strategies, try simulating a basic PostgreSQL setup with and without caching and rate limiting. For example, you could:

- Set up a PostgreSQL instance and populate it with sample data.

- Create simple client scripts that generate concurrent read and write queries.

- Add a cache such as Redis in front of frequently repeated read queries.

- Implement rate limiting logic on your client or API gateway.

- Measure how response times and throughput change under growing load.

This hands-on test will reveal how each technique impacts performance and system behavior.
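A starting point for the measurement step is sketched below. To stay self-contained, it replaces PostgreSQL with a hypothetical `fake_db_query` that simply sleeps for a few milliseconds, and it draws keys uniformly from a small set of hot keys; swap in a real driver call and your own key distribution to test an actual instance.

```python
import random
import statistics
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def fake_db_query(key: int, latency_s: float = 0.005) -> int:
    # Hypothetical stand-in for a PostgreSQL round trip.
    time.sleep(latency_s)
    return key * 2


def run_load(n_requests: int, workers: int, use_cache: bool,
             hot_keys: int = 20, seed: int = 0) -> dict:
    rng = random.Random(seed)
    keys = [rng.randrange(hot_keys) for _ in range(n_requests)]
    cache: dict[int, int] = {}
    lock = threading.Lock()
    db_calls = 0

    def handle(key: int) -> float:
        nonlocal db_calls
        start = time.perf_counter()
        with lock:
            hit = use_cache and key in cache
        if not hit:
            value = fake_db_query(key)
            with lock:
                db_calls += 1
                if use_cache:
                    cache[key] = value
        return time.perf_counter() - start

    t0 = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        latencies = sorted(pool.map(handle, keys))
    elapsed = time.perf_counter() - t0
    return {
        "db_calls": db_calls,
        "throughput_rps": n_requests / elapsed,
        "p50_ms": statistics.median(latencies) * 1000,
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))] * 1000,
    }


if __name__ == "__main__":
    for use_cache in (False, True):
        print("cache" if use_cache else "no cache", run_load(200, 8, use_cache))
```

Comparing the two runs shows how many queries the cache absorbs and how latency percentiles shift. Note that concurrent misses on the same key each reach the database before the first result is cached (a cache stampede), which is one reason production caches need more careful design than this sketch.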

Overall, OpenAI’s story shows that scaling PostgreSQL to support 800 million ChatGPT users demands thoughtful architecture and layered solutions. By combining replicas, caching, rate limiting, and workload isolation, it is possible to achieve high throughput and reliability, albeit with necessary trade-offs and operational attention.
