Can Simple Ideas Become Cinematic Social Videos?

Turning a basic concept into an eye-catching social video sounds straightforward, but in practice it is often complicated and time-consuming. Higgsfield aims to bridge that gap by combining OpenAI models such as GPT-4.1, GPT-5, and Sora 2, so that creators can generate cinematic content from simple text inputs. This article examines how Higgsfield's approach changes video creation for social platforms, and where its limits lie.

For creators and marketers, the value lies in producing engaging content quickly without losing cinematic quality. Understanding the technology behind it and the challenges involved offers insight into why Higgsfield's solution is both innovative and practical.

How Does Higgsfield Work to Convert Text into Cinematic Video?

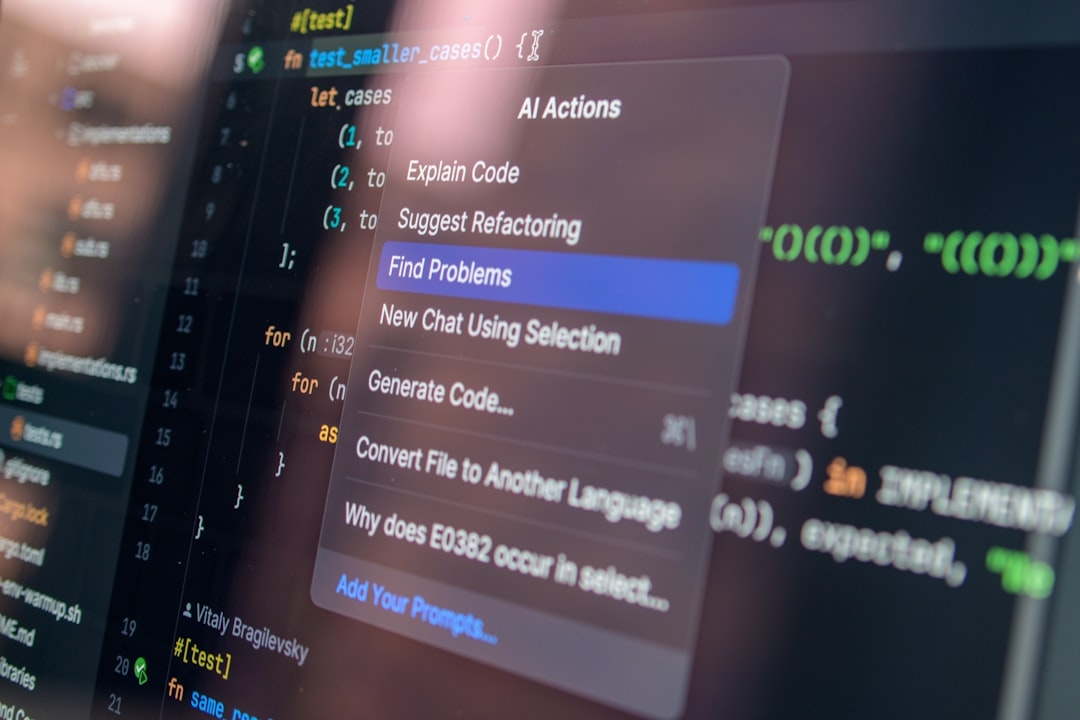

At its core, Higgsfield takes a simple idea, often just a text prompt, and transforms it into a fully formatted, social-first video designed to feel cinematic. This process relies on three key components:

- OpenAI GPT-4.1 and GPT-5: These powerful large language models (LLMs) interpret the input text, expanding on the idea, suggesting script elements, and structuring the narrative to fit social media norms.

- Sora 2: OpenAI's video generation model, which converts text narratives, storyboards, or scene descriptions into visually compelling video sequences.

- Workflow Integration: Higgsfield seamlessly integrates these AI components into an automated pipeline that assembles the script, storyboard, and final video with minimal human intervention.

LLMs like GPT-4.1 and GPT-5 excel at understanding context, tone, and narrative flow, which are critical to turning skeletal input into a coherent story. Sora 2 handles the visual realization of that story, playing a role similar to a director or cinematographer.
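The three-stage flow described above (text model expands the idea, the narrative is structured into scenes, a video model renders them) can be sketched as a minimal pipeline. This is not Higgsfield's implementation: `expand_idea` and `render` are hypothetical stubs standing in for the GPT and Sora 2 calls, and the `Scene`/`Storyboard` types and the fixed four-second shot length are illustrative assumptions, so the sketch runs offline.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    description: str  # what the video model should render
    seconds: float    # target length of this shot


@dataclass
class Storyboard:
    idea: str
    scenes: list[Scene] = field(default_factory=list)

    @property
    def total_seconds(self) -> float:
        return sum(s.seconds for s in self.scenes)


def expand_idea(idea: str) -> list[str]:
    """Hypothetical stand-in for the LLM step (e.g. GPT-4.1 or GPT-5).

    A real pipeline would prompt a language model here; this stub returns
    a fixed hook/build/payoff structure so the sketch is deterministic.
    """
    return [
        f"Hook: an attention-grabbing opening shot about {idea}",
        f"Build: show the key moment of {idea}",
        f"Payoff: closing shot with a call to action for {idea}",
    ]


def build_storyboard(idea: str, beats: list[str], seconds_per_scene: float = 4.0) -> Storyboard:
    """Turn narrative beats into timed scenes for the video stage."""
    return Storyboard(idea=idea, scenes=[Scene(b, seconds_per_scene) for b in beats])


def render(board: Storyboard) -> list[str]:
    """Hypothetical stand-in for the video-synthesis step (e.g. Sora 2).

    Returns placeholder clip identifiers instead of real video files.
    """
    return [f"clip_{i:02d}" for i in range(len(board.scenes))]


def run_pipeline(idea: str) -> tuple[Storyboard, list[str]]:
    beats = expand_idea(idea)              # text stage
    board = build_storyboard(idea, beats)  # structuring stage
    clips = render(board)                  # visual stage
    return board, clips
```

For example, `run_pipeline("a new product launch")` yields a three-scene, 12-second storyboard and three placeholder clips. Keeping each stage behind its own function makes it easy to swap a stub for a real model call later.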

What Makes Higgsfield’s Approach Different From Traditional Video Creation Tools?

Traditional video creation often requires significant manual effort: scriptwriting, storyboarding, filming, editing, and post-production, all of which are resource-intensive. Higgsfield disrupts this by automating much of the ideation and production pipeline.

Unlike popular templated editors, Higgsfield focuses on social-first cinematic quality, which means:

- Videos are formatted and optimized for social platforms’ specific requirements (aspect ratios, duration limits, aesthetic styles).

- The AI-generated narrative emphasizes emotional arcs and storytelling cues that resonate with social audiences.

- Minimal manual tweaks are needed because the content fits platform norms from the start.

In practice, this means creators can input a simple idea like "promote a new product launch" and receive a polished video that feels professionally produced, but without the usual bottlenecks.
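Aspect-ratio formatting is the most mechanical of these requirements. A minimal sketch of one common approach, a centered crop to a target ratio such as the vertical 9:16 used for short-form video, looks like this; it is a generic calculation, not Higgsfield's method, and the function name is hypothetical. It uses integer arithmetic only and rounds the crop down to even dimensions, since 4:2:0 chroma-subsampled video requires even width and height.

```python
def center_crop(width: int, height: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Return (left, top, crop_width, crop_height) for the largest centered
    crop of a width x height frame with aspect ratio ratio_w:ratio_h."""
    if width * ratio_h > height * ratio_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller than (or equal to) the target: keep full width, trim top/bottom.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    # Round down to even dimensions for 4:2:0 encoders.
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h
```

For a 1920x1080 landscape frame, `center_crop(1920, 1080, 9, 16)` returns `(657, 0, 606, 1080)`: the full height is kept and the sides are trimmed to a vertical strip.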

When Should You Use Higgsfield for Video Creation?

While Higgsfield excels at rapid, social-first video creation, it is important to understand the scenarios where it is the best fit.

Optimal use cases include:

- Quick turnarounds for social campaigns where speed and cinematic feel matter.

- Creators lacking extensive video editing experience who need visually engaging outputs.

- Brands wanting consistent styling across multiple video assets without managing an extensive production pipeline.

However, for highly customized or narrative-heavy productions requiring precise creative control, traditional video production still holds advantages. Higgsfield streamlines and democratizes a significant slice of the social video creation space, but it’s not a one-size-fits-all solution.

What Are the Core Implementation Considerations?

Deploying Higgsfield or a similar AI-driven pipeline involves balancing AI sophistication, workflow automation, and content quality. Key considerations include:

- Input Quality: Simple ideas must be clear enough for AI to interpret. Ambiguous prompts can lead to unintended outputs.

- Model Coordination: GPT-4.1 and GPT-5 handle the textual narrative, while Sora 2 manages video synthesis. Keeping their outputs in sync requires careful tuning and validation at the hand-off between them.

- Computational Resources: Both LLMs and video synthesis engines demand high computational power, especially at scale.

- User Interface: The platform must simplify complex AI processes, offering creators intuitive controls without overwhelming technical details.

Higgsfield's maturity shows in how it manages these factors: it offers a stable platform rather than experimental technology that breaks in production environments.
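The model-coordination point can be made concrete with a validation gate between the text and video stages: the language model's output is checked before any expensive video generation runs. This is a minimal sketch under stated assumptions. The JSON shape (`{"scenes": [{"description": ..., "seconds": ...}]}`), the `StoryboardError` type, and the `max_total_seconds` parameter are hypothetical, not a documented Higgsfield or OpenAI format.

```python
import json


class StoryboardError(ValueError):
    """Raised when language-model output cannot be handed to the video stage."""


def validate_storyboard(raw: str, max_total_seconds: float) -> list[dict]:
    """Parse and check a JSON storyboard assumed to look like
    {"scenes": [{"description": str, "seconds": number}, ...]}."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise StoryboardError(f"not valid JSON: {exc}") from exc

    scenes = data.get("scenes") if isinstance(data, dict) else None
    if not isinstance(scenes, list) or not scenes:
        raise StoryboardError("expected a non-empty 'scenes' list")

    total = 0.0
    for i, scene in enumerate(scenes):
        if not isinstance(scene, dict):
            raise StoryboardError(f"scene {i} is not an object")
        desc = scene.get("description")
        secs = scene.get("seconds")
        if not isinstance(desc, str) or not desc.strip():
            raise StoryboardError(f"scene {i} has no description")
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(secs, bool) or not isinstance(secs, (int, float)) or secs <= 0:
            raise StoryboardError(f"scene {i} has an invalid duration")
        total += secs

    if total > max_total_seconds:
        raise StoryboardError(f"total duration {total}s exceeds {max_total_seconds}s")
    return scenes
```

Rejecting malformed output at this boundary is cheaper than discovering the problem after video synthesis, which is the more computationally expensive stage.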

What Challenges Might Arise in Production?

Despite its promise, using Higgsfield or similar AI tools in real-world production comes with trade-offs:

- AI Hallucinations: GPT models may generate plausible but inaccurate script elements, requiring oversight.

- Consistency in Visual Style: Automated video synthesis can sometimes struggle to maintain consistent cinematic aesthetics across scenes.

- Customization Limits: While automation speeds workflow, creators may find limited options to tweak artistic elements compared to manual editing.

- Platform-Specific Constraints: Social media platforms evolve; ensuring videos continuously meet changing specs demands constant updates.

Understanding and preparing for these challenges helps set realistic expectations and maximizes the value derived from Higgsfield.
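For the platform-constraint challenge, one common way to absorb changing specs is to keep them as data rather than code, so an update means editing a table instead of the pipeline. The sketch below assumes hypothetical platforms (`platform_a`, `platform_b`) with placeholder limits; these numbers are illustrative only and must be replaced with each platform's current published requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformSpec:
    aspect: tuple[int, int]  # required width:height ratio
    max_seconds: float       # maximum clip length


# Placeholder values for illustration only; real limits change over time and
# must be taken from each platform's current documentation.
SPECS = {
    "platform_a": PlatformSpec(aspect=(9, 16), max_seconds=60),
    "platform_b": PlatformSpec(aspect=(1, 1), max_seconds=120),
}


def check_clip(platform: str, width: int, height: int, seconds: float) -> list[str]:
    """Return human-readable problems; an empty list means the clip passes."""
    spec = SPECS[platform]
    problems = []
    rw, rh = spec.aspect
    # Cross-multiply to compare ratios without floating-point error.
    if width * rh != height * rw:
        problems.append(f"aspect {width}x{height} is not {rw}:{rh}")
    if seconds > spec.max_seconds:
        problems.append(f"{seconds}s exceeds {spec.max_seconds}s limit")
    return problems
```

In a real deployment the `SPECS` table could be loaded from a configuration file, so a platform change does not require a code release.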

How Can Creators Evaluate Whether Higgsfield Fits Their Needs?

Before committing, creators should perform a quick evaluation framed around three criteria:

- Speed vs. Control: Is fast production prioritized over deep customization?

- Audience Engagement: Does the AI-generated cinematic style match your target audience’s expectations on social platforms?

- Resource Availability: Do you have the technical infrastructure and budget to support an AI-driven video creation workflow?

Testing a pilot project using a simple prompt and analyzing output quality and workflow efficiency is a practical next step.

Quick 3-Step Evaluation Checklist

- Draft a clear content idea and input it into Higgsfield.

- Review the generated script and video for coherence and cinematic feel.

- Assess if turnaround time and effort saved align with your creative goals.
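The third checklist step is simple arithmetic once you have timed a pilot. A minimal sketch, assuming you record your usual production time for a comparable video and the time the pilot took including review and fixes; the `PilotResult` type and the 50% threshold in `worth_adopting` are hypothetical choices to adjust to your own goals.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    baseline_minutes: float  # your usual time to produce a comparable video
    pilot_minutes: float     # time with the AI tool, including review and fixes
    passed_review: bool      # did the output meet your coherence/cinematic bar?


def time_saved_ratio(r: PilotResult) -> float:
    """Fraction of production time saved relative to the baseline."""
    if r.baseline_minutes <= 0:
        raise ValueError("baseline_minutes must be positive")
    return (r.baseline_minutes - r.pilot_minutes) / r.baseline_minutes


def worth_adopting(results: list[PilotResult], min_saving: float = 0.5) -> bool:
    """Hypothetical rule: every pilot must pass review, and the average
    time saved must be at least min_saving of the baseline."""
    if not results or not all(r.passed_review for r in results):
        return False
    avg = sum(time_saved_ratio(r) for r in results) / len(results)
    return avg >= min_saving
```

Requiring every pilot to pass review before counting time saved keeps a fast but unusable output from looking like a win.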

Summary of Higgsfield's Impact on Video Creation

Higgsfield combines OpenAI's GPT-4.1, GPT-5, and Sora 2 to change how creators turn simple ideas into polished, cinematic social videos. By automating narrative generation and video synthesis, it offers significant speed and accessibility advantages.

While not a wholesale replacement for traditional video production, Higgsfield shines in scenarios demanding rapid, social-optimized content. Understanding its trade-offs and testing it against your unique needs ensures it adds meaningful value to your creative workflow.

Next Steps for Creators

Use the evaluation framework above to quickly assess whether Higgsfield can streamline your social video creation. A 10-20 minute trial can reveal whether this AI-powered approach is a good fit or whether conventional methods remain better suited to your projects.
