You probably assume that when your food delivery app shows a picture of your order at your doorstep, it's the real deal. It’s a small but crucial part of the trust we place in these services. But what if that photo was a clever fabrication, generated not by a camera lens, but by an artificial intelligence algorithm? That's exactly the scenario that has shaken the gig economy recently, with DoorDash confirming a ban on a driver accused of faking deliveries using AI-generated imagery.

This isn't just a minor glitch; it's a stark illustration of how rapidly evolving AI technology can be weaponized to exploit existing systems. While AI offers incredible potential for innovation, it also opens new avenues for fraud and deception that platforms must urgently address. The driver's story, which went viral, highlights a critical blind spot in current verification processes.

Overview: The AI Deception Uncovered

The incident reportedly involved a DoorDash driver who, instead of physically delivering food, allegedly submitted AI-generated images as proof of completion. These sophisticated fake images likely mimicked real delivery scenarios, fooling the system and potentially leading to payments for deliveries that never actually occurred. The story gained significant traction on social media, sparking widespread discussion and concern among both customers and other gig workers.

DoorDash responded to the viral claims by confirming that it had taken action and banned the driver from the platform. The confirmation was necessary, but it also underscores the challenges these delivery giants face: detecting AI-generated content, especially content designed to be indistinguishable from reality, is a hard technical problem.

Approach A: How AI Can Forge Deliveries

The core of this deception lies in the capabilities of modern AI image generation models. Tools like Midjourney, DALL-E, and Stable Diffusion can create photorealistic images from text prompts. For a malicious actor, this means they could potentially generate images that look like:

- Your food bag sitting on a doorstep, complete with a house number and mailbox.

- A generic hand holding a takeout container.

- A convincing background that matches the typical suburban or urban environment.

The AI doesn't need to be trained on DoorDash delivery photos specifically; it can synthesize plausible scenes from the vast amount of general visual data it was trained on. The challenge for platforms like DoorDash isn't just confirming that a photo was submitted, but determining whether that photo was *created* by an AI to deceive.

Approach B: The Arms Race Against AI Fraud

DoorDash and other gig economy platforms rely on a mix of automated systems and human oversight to verify deliveries. When a driver marks an order as complete, they might be prompted to upload a photo. This photo is then often checked against various parameters:

- Location data: Does the photo's metadata (if available and not stripped) match the customer's address?

- Image quality and consistency: Does it look like a legitimate photo taken by a smartphone?

- Specific order details: Does the photo show the correct bag or food items?
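The location check in the first bullet can be sketched as a simple geofence test. This is a minimal illustration under stated assumptions, not DoorDash's actual logic: it assumes the photo's GPS tag has already been extracted (for example from EXIF data) and the customer's address has already been geocoded, and the names `GeoPoint` and `photo_location_check` and the 75 m radius are hypothetical choices for this example.

```python
import math
from dataclasses import dataclass
from typing import Optional

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class GeoPoint:
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def haversine_m(a: GeoPoint, b: GeoPoint) -> float:
    """Great-circle distance between two points, in metres (haversine formula)."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def photo_location_check(photo_gps: Optional[GeoPoint], dropoff: GeoPoint,
                         radius_m: float = 75.0) -> str:
    """Compare a photo's GPS tag with the drop-off point.

    Returns "missing" if no GPS tag is available (stripped or never written),
    "match" if the tag lies within radius_m of the drop-off, else "mismatch".
    The radius is a hypothetical tolerance for GPS error.
    """
    if photo_gps is None:
        return "missing"
    return "match" if haversine_m(photo_gps, dropoff) <= radius_m else "mismatch"


dropoff = GeoPoint(37.7749, -122.4194)
print(photo_location_check(GeoPoint(37.7750, -122.4194), dropoff))  # match (about 11 m away)
print(photo_location_check(GeoPoint(37.7800, -122.4200), dropoff))  # mismatch (about 570 m away)
print(photo_location_check(None, dropoff))                          # missing
```

A "match" here only means the tag is consistent with the address. Because metadata can be rewritten, it is one weak signal to combine with others, not proof that the photo is genuine.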

However, fraudsters can increasingly mimic these very characteristics. Metadata can be edited or forged with readily available tools, generators produce high-quality, smartphone-style images, and a well-crafted prompt can even work specific order details into the scene. This creates an ongoing 'arms race' in which detection methods must constantly evolve to keep pace with AI's generative capabilities.

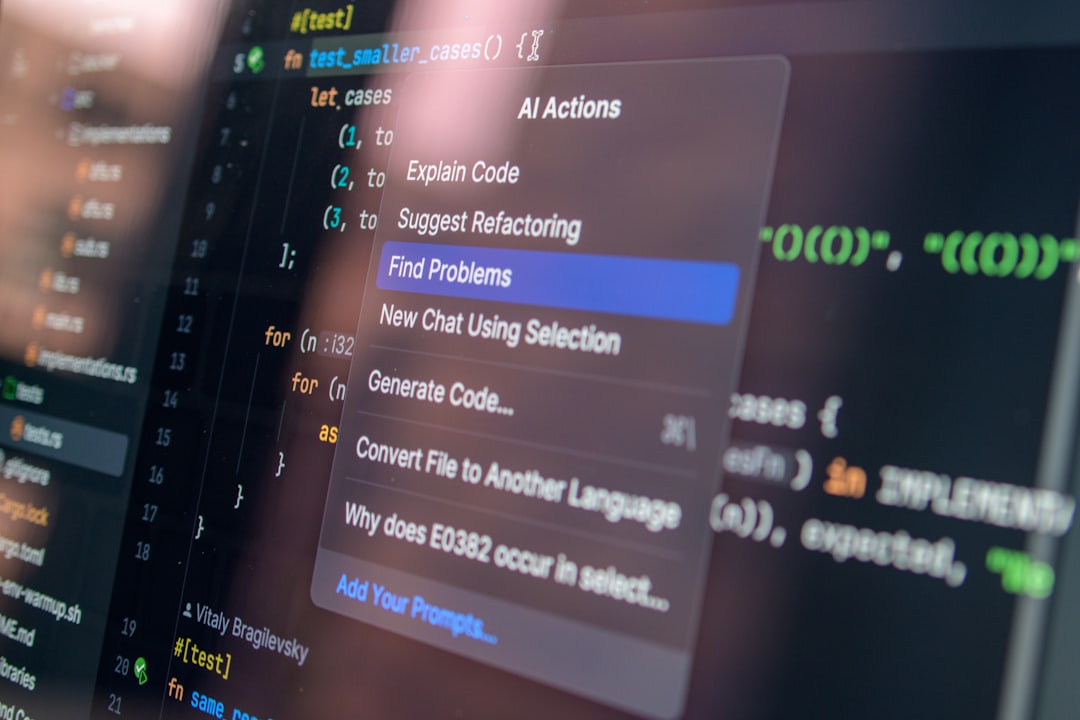

AI Detection Tools: The Next Frontier

The detection of AI-generated images is a rapidly developing field. Sophisticated AI models are being trained to spot subtle anomalies or patterns characteristic of AI synthesis, such as:

- Unnatural textures or lighting.

- Inconsistent details (e.g., too many fingers, distorted faces if present, repetitive patterns).

- Digital artifacts or 'fingerprints' left by the generative model.

These tools are not perfect, and generative AI keeps getting better at evading them. For platforms, integrating detection into the verification workflow also adds complexity and computational cost. It’s a constant cat-and-mouse game, where staying ahead requires continuous investment in R&D and advanced analytics.

When to Use Each Approach (and When Not To)

In the context of delivery verification, the 'approaches' aren't about choosing between two methods, but rather about implementing a robust, multi-layered defense. Relying solely on one method is a recipe for disaster, especially with the sophistication of AI.

The Flawed Reliance on Simple Photo Verification

The fundamental flaw in systems that *only* rely on a driver uploading a photo is that they trust the input implicitly. If the input can be faked, the system is compromised. This is like a security guard who only checks whether someone is wearing a uniform, without verifying that they are actually authorized to enter.

The Necessary Multi-Layered Defense

A truly secure system needs to go beyond just the image. Here's what a more robust approach looks like:

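- **Real-time Location Tracking:** Continuous GPS tracking of the driver's route provides strong evidence that the driver was actually at the delivery location. It works as both a deterrent and a verification method, though GPS can itself be spoofed, so it is most reliable when paired with the other layers.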

- **Advanced AI Image Analysis:** Implementing cutting-edge AI models specifically designed to detect AI-generated content. This isn't just about *if* it's an image, but *how* it was created.

- **Behavioral Analysis:** Monitoring driver behavior patterns. Are they taking unusually long routes? Are they consistently marking deliveries complete in a specific, potentially automated way?

- **Customer Feedback Integration:** Empowering customers to report discrepancies. While not a primary detection method, it can flag suspicious patterns for further investigation.

- **Contextual Data Correlation:** Correlating photo metadata (if available) with GPS data, time stamps, and route information. An AI image might lack genuine, verifiable metadata.
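Contextual data correlation can be sketched as a cross-check between the photo's capture time and the driver's GPS trace: was the driver near the drop-off point at roughly the moment the photo was taken? This is a hypothetical sketch, assuming the app records timestamped GPS pings on the server and that the capture time itself is trustworthy (for example, because the photo was taken in-app rather than uploaded from the gallery). `Ping`, `photo_matches_trace`, the 120-second window, and the 75 m radius are all illustrative.

```python
import math
from dataclasses import dataclass
from typing import List

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class Ping:
    t: float    # Unix timestamp in seconds
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def photo_matches_trace(photo_t: float, dropoff_lat: float, dropoff_lon: float,
                        trace: List[Ping], max_gap_s: float = 120.0,
                        radius_m: float = 75.0) -> bool:
    """True if some GPS ping within max_gap_s of the photo's capture time
    places the driver within radius_m of the drop-off point."""
    for p in trace:
        close_in_time = abs(p.t - photo_t) <= max_gap_s
        if close_in_time and haversine_m(p.lat, p.lon, dropoff_lat, dropoff_lon) <= radius_m:
            return True
    return False


trace = [Ping(1_000, 37.7800, -122.4200), Ping(1_300, 37.7749, -122.4194)]
print(photo_matches_trace(1_320, 37.7749, -122.4194, trace))  # True: driver was at the door
print(photo_matches_trace(1_000, 37.7749, -122.4194, trace))  # False: driver was about 570 m away
```

The point of the design is that a generated image carries no location history: even a perfect fake has to be submitted while the driver's own trace is somewhere else.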

The key is not to pick one method, but to layer them. Think of it like a digital castle with multiple walls and watchtowers. One might be breached, but the others are still holding the line.
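Layering can be sketched as a risk score that each signal adds to, with thresholds that route a delivery to automatic approval, human review, or a payment hold. This is an illustrative sketch only: the signal names, weights, and thresholds are hypothetical, and `ai_image_score` stands in for the output of whatever AI-image detector a platform might use.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    HOLD_PAYMENT = "hold_payment"


@dataclass
class DeliverySignals:
    photo_location: str        # "match", "mismatch" or "missing" (photo GPS vs address)
    trace_corroborates: bool   # GPS trace placed the driver at the drop-off near photo time
    ai_image_score: float      # 0.0 (likely camera) to 1.0 (likely generated), from a detector
    behavior_flagged: bool     # e.g. unusually fast or repetitive completion patterns
    customer_reported: bool    # customer reported the order never arrived


def risk_score(s: DeliverySignals) -> float:
    """Each layer contributes risk independently; the total decides the outcome.
    Weights are hypothetical and would need tuning on real data."""
    score = 0.0
    if s.photo_location == "mismatch":
        score += 0.3
    elif s.photo_location == "missing":
        score += 0.1
    if not s.trace_corroborates:
        score += 0.3
    score += 0.3 * s.ai_image_score
    if s.behavior_flagged:
        score += 0.15
    if s.customer_reported:
        score += 0.25
    return score


def decide(s: DeliverySignals, review_at: float = 0.3, hold_at: float = 0.6) -> Decision:
    """Map the combined risk to an action; mid-range cases go to a human analyst."""
    r = risk_score(s)
    if r >= hold_at:
        return Decision.HOLD_PAYMENT
    if r >= review_at:
        return Decision.HUMAN_REVIEW
    return Decision.APPROVE


print(decide(DeliverySignals("match", True, 0.05, False, False)).value)     # approve
print(decide(DeliverySignals("match", False, 0.10, False, False)).value)    # human_review
print(decide(DeliverySignals("mismatch", False, 0.95, True, True)).value)   # hold_payment
```

In the second case the photo passes the location check, but the GPS layer still catches the discrepancy, which is the "other walls still holding" idea in code form.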

Common Mistakes and How to Avoid Them

Seeing this incident unfold offers valuable lessons for any platform operating in the gig economy, or indeed any system that relies on digital verification. There are common pitfalls that, if avoided, can significantly strengthen security against AI-driven fraud.

Mistake 1: Over-reliance on a Single Verification Point

The most glaring mistake is trusting a single piece of evidence. If your system’s primary (or only) check is an uploaded photo, it's fundamentally vulnerable. Imagine a bank that asks only for a password and nothing else; that wouldn't last long. The DoorDash case shows that photo evidence alone can be bypassed when the photo is a convincing fake.

Mistake 2: Underestimating Generative AI's Capabilities

There's a tendency to think AI detection is 'solved' or that current tools are foolproof. This is a dangerous assumption. Generative AI is evolving at an unprecedented pace. What works today might be obsolete tomorrow. Platforms must continuously research and integrate the latest AI detection techniques, not treat it as a one-time implementation.

Mistake 3: Slow Response to Viral Incidents

While DoorDash did ban the driver, the initial confirmation came after the story went viral. Proactive communication and swift action build trust. Platforms need robust internal mechanisms to detect and respond to such issues *before* they become viral sensations. This involves empowering fraud detection teams with the right tools and data.

Hybrid Solutions: The Path Forward

The future of reliable delivery verification will likely involve hybrid solutions that combine multiple layers of security. We're not talking about a single magic bullet, but a symphony of technologies working in concert.

This means:

- **Combining Geolocation with Image Analysis:** The most direct way to counter AI fakes is to ensure the photo was taken at the correct location. This requires tight integration between GPS data and the image upload process.

- **Leveraging Biometrics (with caution):** While controversial, future solutions might involve driver-facing biometrics for certain high-risk scenarios, or even verifying the device used for the delivery.

- **Developing Decentralized Verification:** Blockchain or other distributed ledger technologies could create tamper-evident records of deliveries, making it harder to alter proof after the fact. They cannot, however, stop a fake photo from being recorded in the first place.

- **Human-in-the-Loop Systems:** While automation is key for scale, having trained human analysts review flagged deliveries or patterns can catch nuanced deceptions that AI might miss.
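The tamper-evidence property behind the "immutable records" idea can be illustrated with a simple hash chain, where each delivery record includes the hash of the one before it. This is deliberately much simpler than a blockchain or distributed ledger (a single writer, no consensus), and the record fields such as `order_id` and `photo_sha256` are hypothetical; it only shows why editing an earlier record becomes detectable.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(prev_hash: str, payload: Dict) -> str:
    """SHA-256 over the previous hash plus canonical JSON of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(log: List[Dict], payload: Dict) -> None:
    """Add a record linked to the hash of the previous record."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev, "hash": record_hash(prev, payload)})


def verify(log: List[Dict]) -> bool:
    """Recompute every link; any edited payload or reordered record breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != record_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


log: List[Dict] = []
append(log, {"order_id": "A1", "status": "delivered", "photo_sha256": "ab12"})
append(log, {"order_id": "B2", "status": "delivered", "photo_sha256": "cd34"})
print(verify(log))  # True
log[0]["payload"]["status"] = "cancelled"  # tamper with an earlier record
print(verify(log))  # False
```

As with any ledger, this protects the record after it is written; whether the photo referenced by `photo_sha256` was genuine still depends on the checks upstream.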

Ultimately, the DoorDash AI fake delivery incident is a wake-up call. It demonstrates that as AI capabilities grow, so must our defenses. Platforms can't afford to be complacent; they need to invest heavily in robust, multi-faceted verification systems that can adapt to the ever-changing landscape of technological deception. For consumers, it means being aware that the digital world, even in simple confirmations like a delivery photo, is becoming increasingly complex to navigate.
