The Problem with Non-Deterministic LLMs

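Large Language Models (LLMs) have transformed artificial intelligence, but their non-deterministic nature makes them notoriously difficult to debug. LLM systems typically sample each output token from a probability distribution, so the same prompt can produce a different response on every run. A bug that appears once may not reappear when a developer tries to reproduce it, which makes the root cause of an error hard to pin down.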

In this article, we'll explore the concept of Deterministic AI and how it can help make LLM systems debuggable again. We'll also take a closer look at the current state of the field and discuss some of the challenges that researchers and developers are facing.

What is Deterministic AI?

Deterministic AI refers to AI systems that always produce the same output for a given input: run the same prompt twice and you get the same answer. This makes them much easier to debug and understand than non-deterministic systems, whose output can change from one run to the next.
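For an LLM, much of the non-determinism comes from how the next token is chosen. The sketch below illustrates this with a hypothetical, hard-coded next-token distribution (`NEXT_TOKEN_PROBS` is made up for illustration, not taken from a real model): greedy decoding always picks the most probable token and is therefore deterministic, while sampling draws a token at random and can differ from call to call.

```python
import random

# Hypothetical next-token probabilities for one fixed prompt (illustrative only).
NEXT_TOKEN_PROBS = {"Paris": 0.6, "Lyon": 0.25, "Marseille": 0.15}


def greedy_decode(probs: dict[str, float]) -> str:
    # Deterministic: always return the highest-probability token.
    # On ties, max() returns the first maximal key in dict insertion order.
    return max(probs, key=probs.get)


def sample_decode(probs: dict[str, float]) -> str:
    # Non-deterministic: draws from the module-level RNG, which is seeded
    # differently on each interpreter run unless random.seed() is called.
    tokens = list(probs)
    weights = list(probs.values())
    return random.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    print([greedy_decode(NEXT_TOKEN_PROBS) for _ in range(5)])
    # ['Paris', 'Paris', 'Paris', 'Paris', 'Paris']
    print([sample_decode(NEXT_TOKEN_PROBS) for _ in range(5)])
    # varies from run to run
```

This is a simplification: real inference engines can add other sources of variation, but the choice between greedy decoding and sampling is the most visible one.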

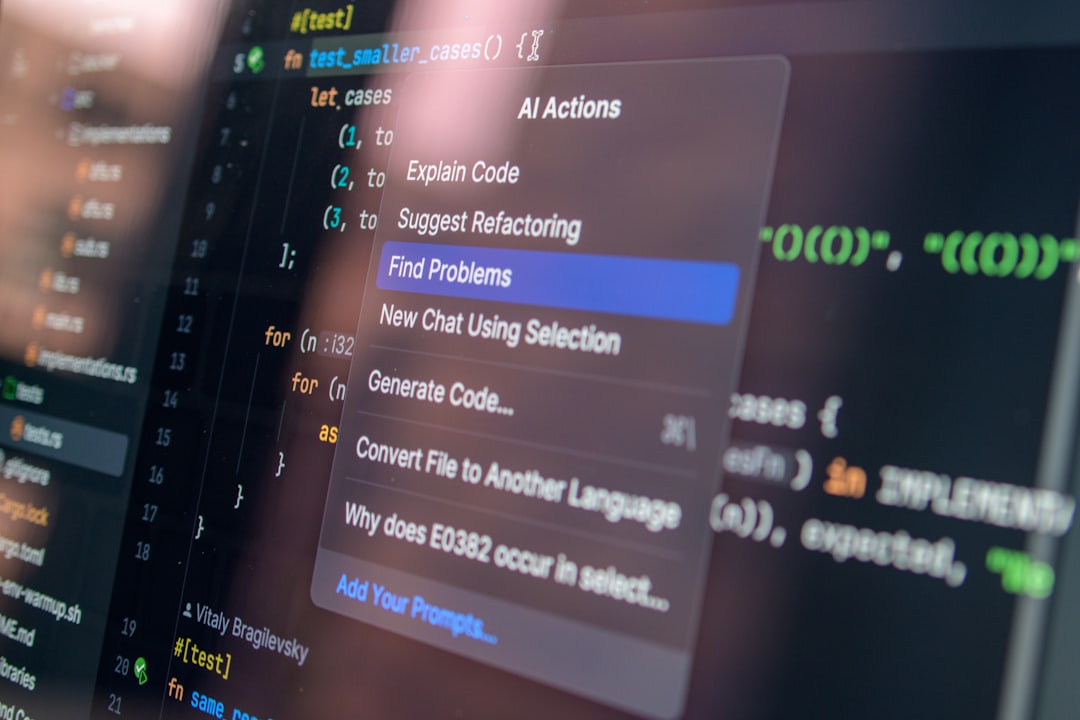

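```python
def deterministic_ai(prompt: str) -> str:
    # Illustrative placeholder: a pure function with no randomness, so the same prompt always yields the same output
    output = prompt.strip().lower()
    return output
```

One of the key benefits of Deterministic AI is that it lets developers predict how an AI system will behave. Because a failing input reproduces the same failure every time, a bug can be isolated and its fix verified, which makes models easier to debug and optimize and can, in turn, improve their performance and accuracy.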
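One practical way to use that predictability while debugging is to run the same input several times and check whether the outputs agree. The harness below is a minimal sketch: `make_sampler` and `check_reproducible` are hypothetical helpers, not part of any library, and the "model" is a toy that picks a word at random. It assumes the system under test can be wrapped as a plain Python function and that its randomness comes from a seedable `random.Random` instance.

```python
import random
from typing import Callable


def make_sampler(seed: int) -> Callable[[str], str]:
    # Hypothetical stand-in for a sampled LLM call. Each sampler owns its own
    # random.Random instance, so the same seed replays the same sequence of draws.
    rng = random.Random(seed)
    vocabulary = ["yes", "no", "maybe"]

    def respond(prompt: str) -> str:
        # The prompt is ignored in this toy; a real system would condition on it.
        return rng.choice(vocabulary)

    return respond


def check_reproducible(
    make_fn: Callable[[], Callable[[str], str]], prompt: str, runs: int = 5
) -> bool:
    # Build a fresh instance for every run so RNG state cannot carry over
    # between runs, then report whether every run produced identical output.
    outputs = {make_fn()(prompt) for _ in range(runs)}
    return len(outputs) == 1


if __name__ == "__main__":
    print(check_reproducible(lambda: make_sampler(seed=42), "Is the cache stale?"))  # True
    print(check_reproducible(lambda: make_sampler(seed=random.randrange(10**9)), "Is the cache stale?"))
```

Creating a fresh instance per run matters here: calling one seeded sampler repeatedly advances its internal state, so successive calls can differ even though the whole sequence can be replayed from the seed.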

How Deterministic AI Can Make LLM Systems Debuggable Again

So how can Deterministic AI help make LLM systems debuggable again? There are several ways in which this can happen:

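- Predictable behavior: the same input always produces the same output, so a reported failure can be reproduced exactly.

- Easier debugging: when an output changes, the cause must be a change in the prompt, model, or code rather than random sampling, which narrows the search.

- Improved performance and accuracy: stable outputs let changes be checked against previously recorded results, so improvements can be measured instead of guessed.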
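Once outputs are stable, a prompt or model change can be checked against stored outputs from a known-good run, much like a snapshot test. The sketch below is hypothetical: `golden` stands in for outputs saved from an earlier run, and `toy_model` is a trivial deterministic placeholder rather than a real LLM.

```python
from typing import Callable


def regression_report(model: Callable[[str], str], golden: dict[str, str]) -> list[str]:
    # Compare current outputs against previously recorded ("golden") outputs.
    # With deterministic generation, any mismatch points to a change in the
    # prompt, model, or code rather than to sampling noise.
    failures = []
    for prompt, expected in golden.items():
        actual = model(prompt)
        if actual != expected:
            failures.append(f"{prompt!r}: expected {expected!r}, got {actual!r}")
    return failures


def toy_model(prompt: str) -> str:
    # Hypothetical deterministic stand-in for an LLM call.
    return prompt.strip().lower()


if __name__ == "__main__":
    # The "Bug" entry is deliberately stale so the report shows one failure.
    golden = {"Hello": "hello", "  World ": "world", "Bug": "BUG"}
    for line in regression_report(toy_model, golden):
        print(line)  # 'Bug': expected 'BUG', got 'bug'
```

The same comparison against a sampled model would flag spurious failures on every run, which is why this kind of check only becomes reliable once generation is deterministic.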

Overall, Deterministic AI could significantly change how AI systems are developed: when behavior is reproducible, debugging becomes a systematic process rather than a matter of chance.

Conclusion

Deterministic AI has the potential to make LLM systems debuggable again. By making AI systems more predictable and easier to debug, it can help developers build more accurate and reliable AI models.
